The system brain, or ‘sysbrain’, could be used for guiding autonomous satellites and spacecraft, but project leader Prof Sandor Veres, from Southampton University, said the system has ‘enormous scope’ for industry and consumers.

‘Essentially we’ve developed a system to give some intelligence to machines, not as human intelligence but specific to particular tasks such as spatiotemporal awareness and avoiding dangerous situations,’ he said. ‘Think about what a spacecraft would need in a mission through the asteroid belt.’

The unique thing about the sysbrain as a control system is that it uses natural language programming (NLP), so it can understand and interpret English-language technical documents.

‘I wanted to enable engineers, who are not highly versed in programming and in [software] agents, to be able to define behaviours, goals, plans and execution sequences — basically solutions for problems — as easily as possible.’

What’s more, engineers could write documents that might enhance the physical or problem-solving skills of robots and machines, then put them on the internet to share, essentially creating a community analogous to the open-source publishing model.

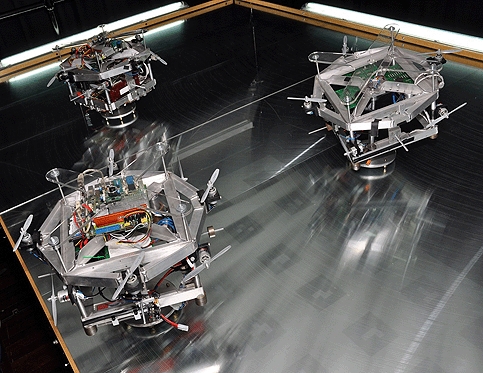

To demonstrate the technology, the researchers have developed a hardware and software ‘autonomous systems testbed’ that consists of a glass table on which model satellites equipped with sysbrains glide on roller bearings, almost without friction, to mimic the zero-gravity properties of space.

Each model has eight propellers to control movement, a set of inertial sensors, and additional cameras that let the models be ‘spatially aware’ and ‘see’ each other.

Veres said the technology is now essentially in place and that he wants to explore a range of other applications with industry partners. He even envisages household robots controlled by sysbrains, which have greater analytical and interpretative abilities and can constantly be updated by their owners.

‘The manual is equal to the code, so you would buy a pet robot that comes with a manual that is written in sentences where the subsections define each activity.

‘We could eventually make machines understand totally natural [spoken] language — because we don’t speak by precise grammar in casual speech, really the machine has to work out what you want to do… this could become reality.’

The work is supported by the European Space Agency and EPSRC and Veres is currently in talks with Google regarding a natural-language database and mobile applications.
