In the age of big data, cloud and the internet of things, our thirst for computing power has never been greater. Many of our readers will be familiar with Moore’s law, the observation that the number of transistors on an integrated circuit, and with it computing capability, doubles roughly every two years as circuits are packed ever more densely with ever smaller transistors. This rule of thumb has held firm for several decades, but the pace of progress is now beginning to slow, with Intel’s CEO noting last year that the doubling period is slipping closer to two and a half years.

As we push against the current limits of nanotechnology and chip fabrication, this slowdown is inevitable, and likely to get worse over time. How, then, will the next great leap forward in computing be achieved? Rather than relying on increasing the raw computing power of integrated circuits, the answer may lie in a reimagining of microchip architecture.

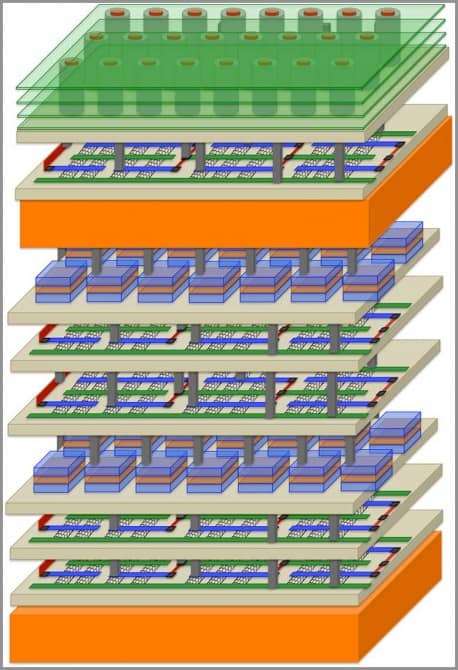

A Stanford-led project recently published its work on a skyscraper-style chip design, where layers of memory and transistors are stacked one on top of the other. The new approach has been labelled Nano-Engineered Computing Systems Technology, or N3XT. According to research co-lead Subhasish Mitra, the technology could herald a new era of computational power.

“The reason we are trying to do this is because the question is how to get the next 1000x of computing performance,” says Mitra, an associate professor of electrical engineering and computer science at Stanford. “Clearly, the way things are going now, you’re not going to get a 1000x (leap) in computing performance.”

“You have to look at what are the driving applications for computing, and those are what I call abundant data computing. That is, computing on lots of data, both in the cloud and from other sources.”
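For a sense of scale, the 1000x target can be set against the doubling periods mentioned earlier. The short Python sketch below is our own back-of-the-envelope illustration, not part of the Stanford work; the function name `years_to_reach` is hypothetical, and it assumes capability keeps doubling at a fixed interval.

```python
import math


def years_to_reach(factor: float, doubling_period_years: float) -> float:
    """Years needed to grow by `factor` if capability doubles once per period.

    Assumes steady exponential growth, which is an idealisation.
    """
    if factor <= 0 or doubling_period_years <= 0:
        raise ValueError("factor and doubling period must be positive")
    # Number of doublings needed is log2(factor); each takes one period.
    return math.log2(factor) * doubling_period_years


if __name__ == "__main__":
    for period in (2.0, 2.5):
        years = years_to_reach(1000, period)
        print(f"doubling every {period} years: {years:.1f} years to reach 1000x")
```

Under these assumptions a 1000x gain takes roughly 20 years at a two-year doubling period and roughly 25 years at two and a half, which is the kind of wait the researchers hope a new architecture can avoid.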

In today’s computers, processors and memory are laid out side by side, much like suburban sprawl. Moving data around this layout costs time and energy, just as getting from A to B in a suburb does, whether on foot or by car.

“If you really look at where the energy is spent, it’s spent sending the data back and forth from memory to compute,” Mitra explains. “So to get the next 1000x of benefit, one has to think about not only the transistor, but also think about how to get better memory access.”

“To be able to do that, you have to have memory that is very close to computing. People have talked about this for a very long time. You hear people talking about computing in memory, memory in compute etc. The real question is how do you realise the next-gen architecture that can accomplish that.”
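Mitra’s point that the transistor alone is not enough can be illustrated with a toy energy model. The sketch below is our own simplification, in the spirit of Amdahl’s law, and does not come from the N3XT paper; the function name and the 90 per cent figure for energy spent on data movement are purely hypothetical.

```python
def overall_energy_gain(movement_fraction: float,
                        compute_gain: float,
                        movement_gain: float) -> float:
    """Overall energy improvement when compute and data movement improve separately.

    movement_fraction: share of today's energy spent moving data (0 to 1).
    compute_gain:      factor by which compute energy is reduced.
    movement_gain:     factor by which data-movement energy is reduced.
    """
    if not 0.0 <= movement_fraction <= 1.0:
        raise ValueError("movement_fraction must be between 0 and 1")
    if compute_gain <= 0 or movement_gain <= 0:
        raise ValueError("gains must be positive")
    # Energy left after the improvements, as a fraction of today's total.
    remaining = ((1.0 - movement_fraction) / compute_gain
                 + movement_fraction / movement_gain)
    return 1.0 / remaining


if __name__ == "__main__":
    share = 0.9  # hypothetical: 90% of energy goes on moving data
    print("better transistors only:", overall_energy_gain(share, 1000, 1))
    print("better memory access only:", overall_energy_gain(share, 1, 1000))
    print("both improved:", overall_energy_gain(share, 1000, 1000))
```

With these made-up numbers, improving only the transistors yields about 1.1x overall and improving only memory access yields just under 10x; only when both improve together does the total approach 1000x.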

The simple answer is to build upwards. Naturally, Mitra and his colleagues are not the first to contemplate this straightforward solution, but traditional chip manufacturing methods have made it difficult in practice. Fabricating a silicon chip requires temperatures close to 1,000°C, and this makes it extremely challenging to build one layer on another without damaging the chip below. Current methods rely on constructing two chips separately, then stacking and joining them with thousands of tiny wires. However, these 3D chips are susceptible to data traffic jams due to the relatively low number of connecting wires compared to the number of transistors.

The Stanford team’s N3XT architecture relies on a different technique. Instead of silicon, it uses carbon nanotubes (CNTs). Transistors made from CNTs are faster and more energy-efficient than their silicon counterparts, and more importantly they allow layers of transistors and memory to be stacked. The different “floors” of the high-rise chip are connected by millions of minute pathways known as “vias”, which are integrated into the build process.

“The carbon nanotube transistors and the kinds of materials that we use for memory, you can actually fabricate the transistors and the memory at a very low temperature, at around 300°C,” says Mitra. “That’s why you can build the ideal architecture for computation next to memory.”

Along with Mitra, the N3XT consortium is co-led by fellow Stanford professor H.-S. Philip Wong. The pair have so far been joined by colleagues from several US universities, as well as numerous academics from within Stanford. Those academics include paper co-author Chris Ré, who says he joined the collaboration to make sure that computing doesn’t enter what some refer to as a “dark data” era.

“There are huge volumes of data that sit within our reach and are relevant to some of society’s most pressing problems from health care to climate change, but we lack the computational horsepower to bring this data to light and use it,” says Ré. “As we all hope in the N3XT project, we may have to boost horsepower to solve some of these pressing challenges.”
