This is a call to engineers to stand up and demand the prohibition of autonomous lethal targeting by robots. I ask this of engineers because you are the ones who know just how limited machines can be when it comes to making judgments; judgments that only humans should make; judgments about who to kill and when to kill them.

Warfare is moving rapidly towards greater automation where hi-tech countries may fight wars without risk to their own forces. We have seen an exponential rise in the use of drones in Iraq and Afghanistan and for CIA targeted killings and signature strikes in Pakistan, Yemen and Somalia. Now more than 70 states have acquired or are developing military robotics technology.

The current drones are remote-controlled for use against low-tech communities in a permissive air space. With a delay time of 1.5 to 4 seconds from moving the joystick to motor response, air-to-air combat or avoiding anti-aircraft fire are problematic. Moreover, technologically sophisticated opponents would adopt counter strategies that could render drones useless by jamming communications.

Fully autonomous drones that seek and engage targets without communicating with an operator are not restricted by human G-force or response-time limitations, allowing sharp turns and maneuvers at supersonic speeds. So taking the human out of the control loop has been flagged as important by all of the US military roadmaps since 2004. This would enable the US to lower the number of required personnel in the field, reduce costs, and decrease operational delays. But it would also fundamentally reduce our humanity.

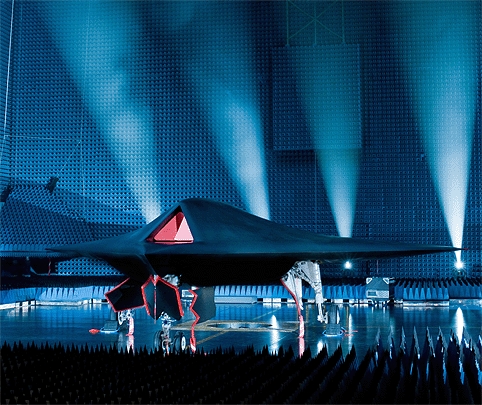

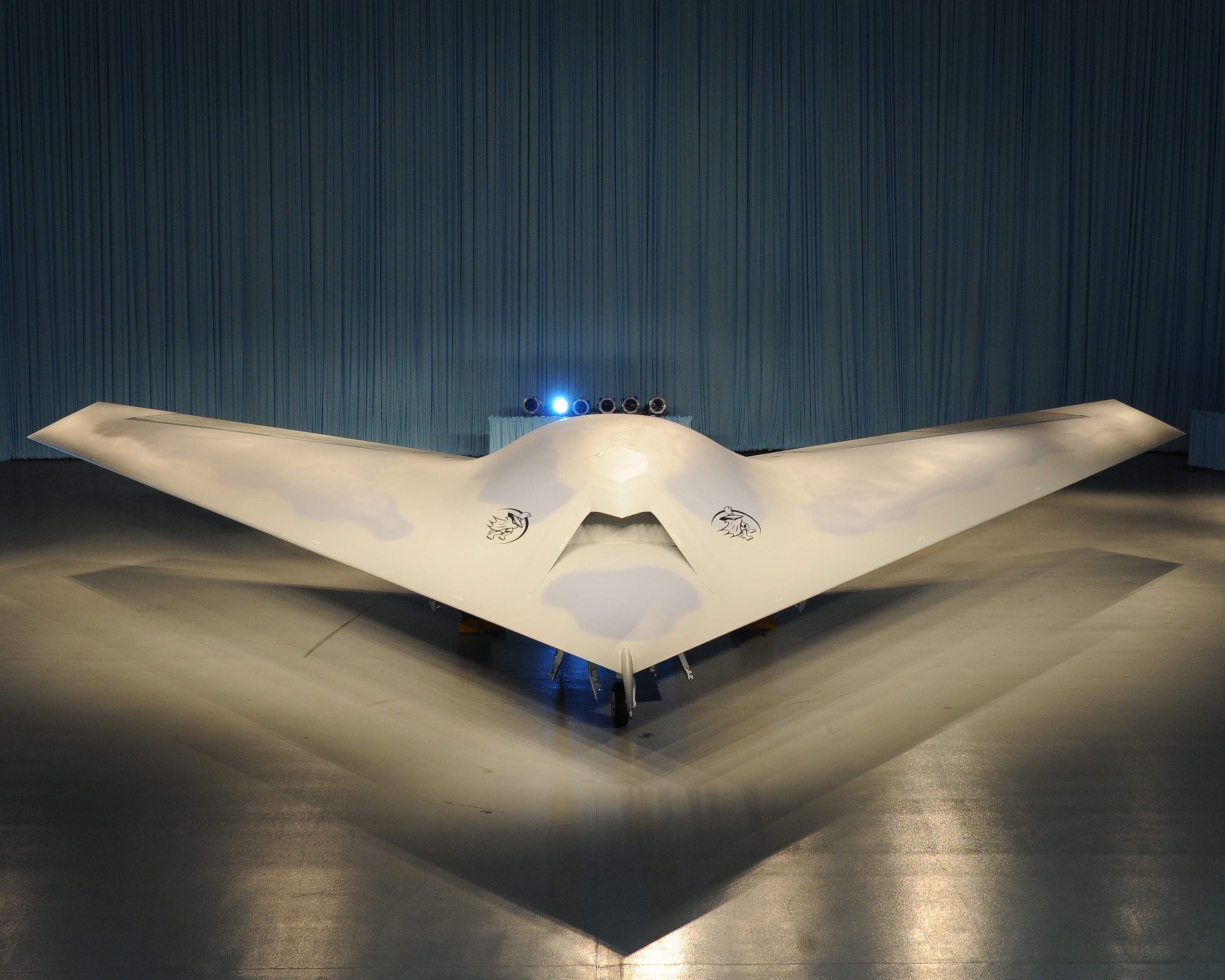

The UK company BAE Systems will be testing its Taranis intercontinental autonomous combat aircraft in Australia this spring. The US has been testing the fully autonomous subsonic Phantom Ray and the X-47B, due to appear on US aircraft carriers in the Pacific around 2019. Meanwhile, the Chinese Shenyang Aircraft Company is working on the Anjian supersonic unmanned fighter aircraft, the first drone designed for aerial dogfights.

The US HTV-2 program to develop armed hypersonic drones has tested the Falcon at 13,000 mph. The aim is to reach anywhere on the planet with an unmanned combat aircraft within 60 minutes. The hypersonic fully autonomous drones of the future would create very powerful weapons capable of making decisions well outside the speed of plausible human intervention.

A big problem is that autonomous weapons would not be able to comply with International Humanitarian Law (IHL) and other safeguards necessary to protect civilians in armed conflict. There are no computer systems capable of distinguishing civilians from combatants or making intuitive judgments about the appropriateness of killing in the way required by the Principle of Distinction. Machines of the future may be capable of some types of discrimination, but it is highly unlikely that they will have the judgment, reasoning capabilities or situational awareness that humans employ in making proportionality assessments. And accountability for mishaps or misuse is a major concern, as so many different groups would be involved in the production and deployment of autonomous weapons.

The US Department of Defense directive on “autonomy in weapons systems” (November 2012), which covers weapons that “once activated, can select and engage targets without further intervention by a human operator”, seeks to assure us that such weapons will be developed to comply with all applicable laws. But this cannot be guaranteed, and the directive gives a green light to the development of machines with combat autonomy.

The US policy directive emphasizes verification and testing to minimize the probability of failures that could lead to unintended engagements or loss of control. The possible failures listed include “human error, human-machine interaction failures, malfunctions, communications degradation, software coding errors, enemy cyber attacks or infiltration into the industrial supply chain, jamming, spoofing, decoys, other enemy countermeasures or actions, or unanticipated situations on the battlefield”.

How can researchers possibly minimize the risk of unanticipated situations? Testing, verification and validation are stressed without acknowledging the virtual impossibility of validating that mobile autonomous weapons will “function as anticipated in realistic operational environments against adaptive adversaries”. The directive fails to recognize that proliferation means we are likely to encounter comparable technology from other powers. And as we know, if two or more machines with unknown strategic algorithms meet, the outcome is unpredictable and could create unforeseeable harm for civilians.

The bottom line is that weapon systems should not be allowed to make decisions to select human targets and engage them with lethal force. We need to act now to stop the kill function from being automated. We have already prohibited chemical weapons, biological weapons, blinding lasers, cluster munitions and antipersonnel landmines.

We now need a new international treaty to pre-emptively ban fully autonomous weapons.

I call on you to sign our call for a ban at http://icrac.net/ before too many countries develop the technology and we venture down a path from which there is no return.

Noel Sharkey is professor of artificial intelligence and robots at the University of Sheffield
