The world’s first electronic autonomous mobile robots, Elmer and Elsie, were created by Dr William Grey Walter in Bristol, UK in 1948. Seventy years on, electronics shows like CES 2018 are full of them, but our homes and workspaces are not.

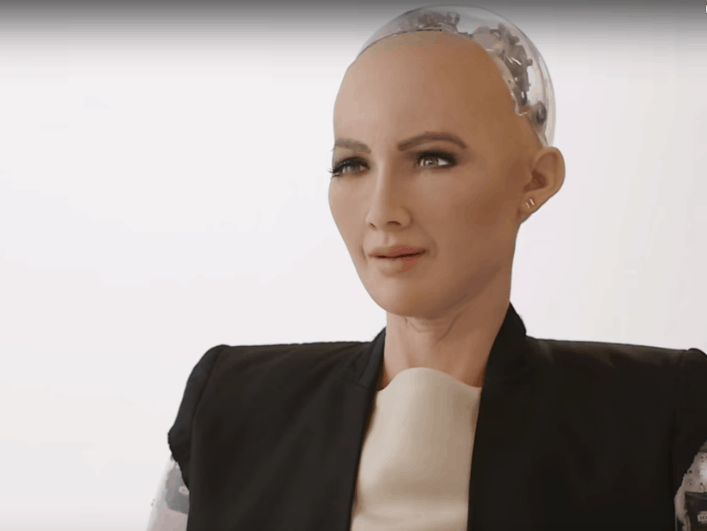

Already, we’ve seen table tennis-playing robots, laundry-folding robots… and an incredibly creepy robot (we’re looking at you, Sophia), yet the pattern of autonomous robotic performances remains the same: lots of nice demos; little that works well in messy real-world situations.

Robots can do very clever things within known spaces, but systems get ‘lost’ if they move too fast or conditions aren’t perfect. Expensive technology can quickly look pretty stupid.

Robotics in the real world, it transpires, isn’t easy: “Edge cases are the largest barrier to widespread adoption of this technology in the marketplace; uncommon and unpredictable events that make solving any problem significantly harder,” said Jorgen Pedersen, CEO of US robotics firm Re2 Robotics. “Ninety-nine per cent reliability isn’t high enough if you’re talking about a device potentially harming a person”.

Among the massive technical challenges surrounding autonomous robots, one of the biggest is computer vision.

In humans, vision encompasses an astonishing range of capabilities. We see colour, perceive motion, identify shapes, and can gauge size, distance and speed. On top of all that, we see in three dimensions (even though images fall onto the retina in two), fill in blind spots, and erase objects that cloud our view – even our own noses.

The biological apparatus that performs these tasks is by far the most powerful and complex of our sensory systems. In the brain, neurons devoted to visual processing number in the hundreds of millions and take up about 30 per cent of the cortex, as compared with three per cent for hearing.

Likewise, robot vision is less concerned with image capture, and more focused on cognition – what we generally regard as Artificial Intelligence. In short, robot vision is exceptionally complicated. The field includes, among other sub-domains: scene reconstruction, event detection, video tracking, object recognition, 3D pose estimation, learning, indexing, motion estimation, and image restoration.

Despite Dr Walter’s stellar work 70 years ago, getting from A to B successfully is still one of the biggest hurdles for autonomous mobile robots, and spatial cognition is a key requirement if they’re to improve. A Roomba might work OK by spiralling outwards from a fixed point, but any robot with grander goals needs to build good spatial awareness on the fly.

One innovation already helping deliver this is Simultaneous Localisation and Mapping (SLAM) algorithm design.

SLAM should be thought of more as a maths problem than a technology: “It’s really the most basic physical problem a robot encounters when it asks the question ‘Where am I?’” said Martin Rufli from IBM Research. SLAM algorithms turn data from various sensors into useful information that computers can use to understand their environment and their place within it – all from scratch and in near-real-time.

The majority of modern visual SLAM systems are based on tracking a set of points through successive camera frames and using these tracks to triangulate the points’ 3D positions, while simultaneously using the estimated point locations to calculate the camera pose that could have observed them. If triangulating points without first knowing the camera’s position seems unlikely, finding both simultaneously looks like an impossible chicken-and-egg problem. In fact, by observing a sufficient number of points it is possible to solve for both structure and motion concurrently. By carefully combining measurements of points made over multiple frames it is even possible to retrieve accurate position and scene information with just a single camera.
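To make the triangulation half of that loop concrete, here is a minimal sketch in Python with NumPy. It is not taken from any SLAM system mentioned in this article: the camera intrinsics `K`, the two camera poses and the test point are all made-up values, and the function names are hypothetical. It assumes the two camera poses are already known and recovers one 3D point from its pixel position in each frame using standard linear (DLT) triangulation.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X into pixel coordinates with a 3x4 camera matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two pixel observations."""
    # Each observation contributes two linear constraints on the homogeneous point.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

# Assumed (illustrative) pinhole intrinsics: 500 px focal length, 640x480 image.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])

# Frame 1 sits at the origin; frame 2 is the same camera moved 0.2 m along x.
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.2], [0.0], [0.0]])])

point = np.array([0.3, -0.1, 4.0])           # made-up scene point, 4 m away
estimate = triangulate(P1, P2, project(P1, point), project(P2, point))
print(estimate)
```

With noise-free observations the estimate matches the original point to numerical precision. A real system has to cope with noisy tracks and unknown poses, which is why the problem is solved jointly rather than in one pass like this.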

Many devices, from robots to drones, and AR/VR to autonomous cars, already use some form of SLAM to navigate, but the technology is still in the very earliest stages of realising its potential according to Owen Nicholson, CEO of SLAMcore: “There’s no general solution available that allows an independent platform in an unknown environment to localise itself accurately, know where it is and perhaps, more importantly, know its velocity so it understands where it will be in the future.”

Nicholson’s last point is key because, at its heart, SLAM is an optimisation problem. The goal is to compute the best configuration of camera poses and point positions in order to minimise the difference between a point's tracked location and where it is expected to be: “Without that fundamental information, you won’t have a robot capable of necessary tasks like efficient path planning,” he stresses.
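The difference described above is usually called reprojection error. As a rough illustration only, the sketch below refines a camera’s translation so that known 3D points land where they were observed in the image. Everything in it is an assumption made for the example: the intrinsics `K`, the point coordinates, the fixed identity rotation and the function names are hypothetical, and a plain Gauss-Newton loop with a numerical Jacobian stands in for the far more elaborate optimisers that production SLAM systems use over many poses and points at once.

```python
import numpy as np

# Assumed (illustrative) pinhole intrinsics.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])

def project(points, t):
    """Project Nx3 world points into a camera with identity rotation and translation t."""
    cam = points + t                      # world -> camera frame (rotation omitted for brevity)
    pix = cam @ K.T
    return pix[:, :2] / pix[:, 2:3]

def residuals(t, points, observed):
    """Reprojection error: predicted pixel positions minus tracked pixel positions."""
    return (project(points, t) - observed).ravel()

def refine_translation(t0, points, observed, iters=10, eps=1e-6):
    """Gauss-Newton refinement of the camera translation with a forward-difference Jacobian."""
    t = np.asarray(t0, dtype=float).copy()
    for _ in range(iters):
        r = residuals(t, points, observed)
        J = np.empty((r.size, 3))
        for k in range(3):
            step = np.zeros(3)
            step[k] = eps
            J[:, k] = (residuals(t + step, points, observed) - r) / eps
        # Solve the linearised least-squares problem J @ delta = -r.
        delta, *_ = np.linalg.lstsq(J, -r, rcond=None)
        t += delta
    return t

# Made-up scene points (metres) and a made-up true camera translation.
points = np.array([[-1.0, -0.5, 4.0],
                   [1.0, -0.5, 5.0],
                   [-1.0, 0.5, 6.0],
                   [1.0, 0.5, 3.0],
                   [0.0, 0.0, 4.5]])
true_t = np.array([0.1, -0.05, 0.2])
observed = project(points, true_t)        # stands in for tracked image points

t_start = np.zeros(3)
t_est = refine_translation(t_start, points, observed)
cost_before = np.sum(residuals(t_start, points, observed) ** 2)
cost_after = np.sum(residuals(t_est, points, observed) ** 2)
print(t_est, cost_before, cost_after)
```

The same idea extends to optimising rotations and the point positions themselves; the sketch fixes those only to keep the example short.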

https://www.youtube.com/watch?time_continue=10&v=aqweYtdUTdI

SLAMcore, which spun out from the Department of Computing at Imperial College London, is currently working on a commercial positioning product that will be available for licensing this year: “Our goal isn’t just to solve spatial cognition problems – we need to do so affordably,” Nicholson says. “Algorithmically, a lot of things are possible with expensive hardware; the challenge is to ensure they remain possible when you’re using cheap processors, cameras and inertial measurement units.”

Hardware costs will prove fundamentally important in shaping the future of autonomous robotics because the hardware defines the range of possible applications; the cheaper the systems, the broader the range. The SLAMcore team has experience with all three levels of SLAM: positioning, mapping and semantic understanding, but they can’t jump ahead to level three problems before levels one and two are solved. Eventually, they will help manufacturers build systems that can semantically identify objects in 3D and understand a useful range of associated properties.

So where are we likely to see the biggest real-world breakthroughs?

Pedersen thinks the roadmap here is actually pretty clear: “The biggest initial advances will be made in the three types of work at which robots already excel: the dull, the dirty, the dangerous,” he explains. Pedersen understands these areas well; his cutting-edge grasping systems are already implemented everywhere from bomb disposal to U.S. Air Force cockpits. “There are two markets in particular that are suffering from significant labour shortages and require a lot of dull, dirty and dangerous work: agriculture and construction,” he points out.

We’ve already seen automation introduced in tightly controlled environments such as factories. Similarly, a farmer’s field is private property – it can be sectioned off from other areas: “The introduction of technology in these spaces may not require the 99.99 per cent reliability you would require in homes or public spaces,” Pedersen notes.

But improved reliability will be required for large mobile systems like biped robots if they’re ever to make their way into consumers’ homes, and that’s still a way off. The main challenge is breaking down barriers between robot and human worlds: “If humans and robots can achieve a shared representation of objects, we can reason and understand each other,” says Rufli. “Essentially, it’s about creating something like a phone app API; producing information that’s interoperable between our brains and the robots’”.

“We’re not looking at super intelligence, but seamless understanding of a space is key,” Rufli says. “Once you have formalised that, you can look at exploiting the many ways robot brains are so different from ours. For example, you can make a literal copy of a robot’s brain, allowing easy knowledge transfer; this in itself will lead to an explosion of capability.”

The ‘seamless understanding’ Rufli mentions requires us to overcome some seemingly basic issues like sensor calibration: “These are low-level problems; it’s mind-boggling that we still struggle to calibrate cameras in 2018,” he says.

For all the work left to do, 2017 saw a real shift in market momentum: “I keep running into people who are building applications,” says Rich Walker, CEO of The Shadow Robot Company. “Business leaders are starting to see how robots will help them solve commercial problems. Building a robot used to be the whole challenge; now the paradigm is ‘Do you have a business model that works, and can it exploit the next generation of robots?’”

2018 is unlikely to usher in a single elegant, integrated solution for true spatial cognition. Nevertheless, the sector is expected to grow from $4.12 million in 2017 to $14.29 billion by 2023. While the technology is still not sufficiently mature to replicate the complex brain systems required, Rufli believes we may not need to wait for that to happen: “The success of autonomous robotics won’t rely on a cognition system that can solve for everything on its own – we’re still a long way off that,” he proffers. “It will be a patchwork, exploiting infrastructure so that, all of a sudden, the requirements on SLAM and object recognition lower enough that we can build reliable systems.” Once that happens, Raibert’s assertion about the growth of robotics may yet come true. Just don’t expect it to happen this year.
