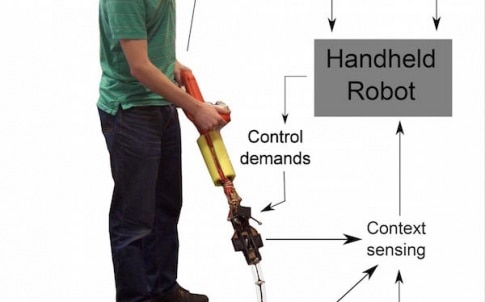

Handheld tools usually lack any understanding of the task they are performing. Dr Walterio Mayol-Cuevas and PhD student Austin Gregg-Smith, from Bristol University’s Department of Computer Science, have been working on robot prototypes and studying how best to interact with a tool that ‘knows and acts’.

“For now we program the tasks manually as we are currently still exploring the way in which users who are already very capable and intelligent will interact and cooperate with a tool that has motion and also a degree of task knowledge,” Mayol-Cuevas told The Engineer via email.

“This is because users using our robots have essentially never experienced such shared cognition and actuation with a handheld tool before,” added Mayol-Cuevas, a reader in robotics, computer vision and mobile systems.

“Eventually we want to move to automated teaching of the tasks, perhaps by observation on how a skilled user does things.”

Compared with power tools, which have a motor and some basic sensors, the handheld robots have been designed with more degrees of motion, allowing greater independence from the motions of the user, and are aware of the steps being carried out.

The team studied user performance on two tasks – picking and dropping objects to form tile patterns and aiming in 3D for simulated painting.

Three levels of autonomy were considered: no autonomy; semi-autonomous, in which the robot advises but does not act; and fully autonomous, in which the robot advises and acts by correcting or even refusing to perform incorrect actions.

“Overall, users preferred the increased level of help offered by the robots, and in some of the evaluated tasks a significant improvement on time-to-completion performance was observed,” Mayol-Cuevas said.

The team has designed and made two principal prototypes so far.

“We decided to use 3D printing technology as much as possible, and the drive mechanism is what is called cable-driven, which is similar to tendons. Servo motors actuate these tendons and make the robot move,” Mayol-Cuevas said.

“We are currently using motion capture, which means external cameras looking at markers on the robot and the objects it interacts with,” he added.

“This gives us the best accuracy and an ideal set-up for evaluating the interaction between the robot, the world and the user. Our ultimate aim is to embed all sensors onboard the tools so that they are self-contained and are used anywhere.”

The team is now considering tasks closer to real applications.

“Consider the task of cleaning in places such as hospitals, where one needs to ensure that all places and surfaces have been cleaned and/or treated,” Mayol-Cuevas said.

“A handheld robot will keep track of the task progress and guide the user to ensure the task is done.

“The applications are vast, essentially anywhere where a handheld tool is currently used, such as agriculture, cleaning, construction and maintenance.”
