Andrew Wade, senior reporter

This week DeepMind, the Google-owned company at the forefront of artificial intelligence research, revealed that it has successfully trained a neural network to learn sequentially.

While this may not sound particularly impressive, it represents a major step forward for AI and brings us one step closer to the holy grail/terrifying existential threat of artificial general intelligence (AGI), sometimes referred to as strong AI. In the past, neural networks have been trained to specialise in individual tasks. One example is AlphaGo, the program developed by DeepMind to play the board game Go. By initially learning from the moves of Go masters, then playing millions of games against itself, AlphaGo developed a highly specialised neural network capable of beating the world’s best human players.

However, if you asked AlphaGo to take on Garry Kasparov in a game of chess, it would have to start learning that game from scratch, permanently leaving behind its enormous Go knowledge. This is known in cognitive science as catastrophic forgetting, and has been widely acknowledged as a significant barrier for neural networks.

In contrast, humans learn incrementally, building our skill sets as we experience new things in our lives and using previous knowledge from other tasks to guide our decisions. Highly important skills such as motor function and language are strongly embedded in our brains via a process called synaptic consolidation. The more often a neural pathway fires, the less likely it is to be overwritten or forgotten. It’s this process that the team at DeepMind have sought to replicate.

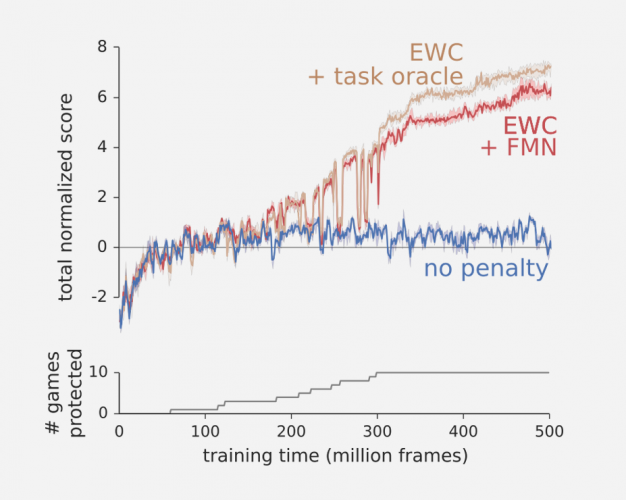

Known as Elastic Weight Consolidation (EWC), DeepMind’s new algorithm enables a neural network to ‘remember’ previous tasks by making it harder to overwrite the connections most important to those tasks. To test its effectiveness, the researchers used a program called Deep Q-Network (DQN), which had previously mastered a series of Atari games from scratch without being taught the rules. However, DQN discarded its knowledge of each game once it progressed to the next. Now, the addition of the EWC algorithm has allowed the neural network to remember facets of each game, learning them sequentially and using knowledge from one to assist with others.
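For readers who want to see the idea in code, the consolidation step described above can be sketched as a penalty added to the loss on the new task: the further an important weight drifts from the value it held after the old task, the larger the penalty. This is a minimal illustration rather than DeepMind's implementation. The function names and all of the numbers are hypothetical, and the importance values are simply made up here, whereas the paper estimates them from the network itself.

```python
def ewc_penalty(weights, anchor_weights, importance, strength):
    """Quadratic penalty that grows as important weights drift from their old values."""
    return 0.5 * strength * sum(
        f * (w - a) ** 2
        for w, a, f in zip(weights, anchor_weights, importance)
    )


def ewc_loss(new_task_loss, weights, anchor_weights, importance, strength=1.0):
    """Loss on the new task plus the consolidation penalty for the old task."""
    return new_task_loss + ewc_penalty(weights, anchor_weights, importance, strength)


# Hypothetical numbers: two weights learned on an earlier task.
anchor = [1.0, -2.0]       # weight values after the first task
importance = [10.0, 0.1]   # assumed: first weight mattered a lot, second barely

# Moving each weight by the same amount (0.5) incurs very different penalties.
move_important = ewc_penalty([1.5, -2.0], anchor, importance, strength=1.0)
move_unimportant = ewc_penalty([1.0, -1.5], anchor, importance, strength=1.0)
```

In this toy case, shifting the important weight costs a hundred times more than shifting the unimportant one by the same amount, so training on a new task is nudged towards changing the weights the old task cares about least.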

"Previously, DQN had to learn how to play each game individually," explains the research paper, which is published in the PNAS journal. "Whereas augmenting the DQN agent with EWC allows it to learn many games in sequence without suffering from catastrophic forgetting."

So, DeepMind has shown that the problem of catastrophic forgetting is not insurmountable, and in doing so has made a big leap forward in AI. However, this method of sequential learning is, for now, not a particularly effective way for computers to solve problems. EWC allowed DQN to remember how to play a range of Atari games, transferring knowledge from one game to another, but learning each game from scratch still brings about better results. The challenge for DeepMind now is to find out how EWC can be used to improve overall performance.

According to James Kirkpatrick, DeepMind’s lead researcher on the project, we’re still a long way from the type of AGI that can mimic the complex, layered intelligence exhibited by humans. But teaching neural networks to ‘remember’ is a major breakthrough, and takes us one step closer to the possibility of strong AI. Many prominent figures, including Stephen Hawking, Elon Musk and Bill Gates, have warned of the potential threat that AI poses to humanity, while acknowledging its capacity to solve some of our biggest problems. As we travel along this uncertain path, we would do well to avoid some catastrophic forgetting of our own.
