The system uses advanced eye-tracking technology combined with fast piezoelectric actuators connected to an ‘artificial eye’.

‘We have a human operator who sees what the robot sees — he can control the head and eyes of the robot with his own head and eyes, and can interact through the robot in a kind of Wizard of Oz experience, sitting inside the robot,’ said Stefan Kohlbecher of the Ludwig-Maximilians University Munich.

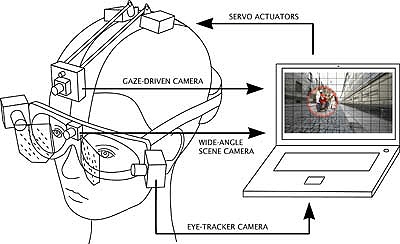

The current project stems from work done in 2008, when the team created a head-mounted eye-tracking system that controls the direction of a centre-mounted camera. Thus, wherever the wearer’s eyes look, the camera follows.

This in itself was a considerable challenge, however, as commercially available eye-tracking technology can usually sample at no more than 60Hz, which would create a slow, jumpy output. The team developed a mobile system that tracks eye movements in three dimensions at 600Hz using infrared detectors coupled with a mirror that reflects infrared wavelengths but lets visible light through.
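To make the difference between the two sampling rates concrete, the short Python sketch below works out the time between samples and how far the eye could rotate in that time. The 60Hz and 600Hz figures come from the article; the eye speed of 500 degrees per second is purely an illustrative assumption for a fast eye movement, and the function names are hypothetical.

```python
def sample_interval_ms(rate_hz: float) -> float:
    """Time between two consecutive samples, in milliseconds."""
    return 1000.0 / rate_hz


def gaze_shift_per_sample_deg(rate_hz: float, eye_speed_deg_per_s: float) -> float:
    """Angle the eye rotates between two samples, assuming constant speed."""
    return eye_speed_deg_per_s / rate_hz


# Illustrative assumption, not a figure from the article.
ASSUMED_EYE_SPEED_DEG_PER_S = 500.0

for rate_hz in (60.0, 600.0):
    interval = sample_interval_ms(rate_hz)
    shift = gaze_shift_per_sample_deg(rate_hz, ASSUMED_EYE_SPEED_DEG_PER_S)
    print(f"{rate_hz:.0f}Hz: {interval:.2f}ms between samples, "
          f"{shift:.2f} degrees of eye rotation per sample")
```

Under that assumed speed, a 60Hz tracker updates about every 16.7ms, during which the eye could turn roughly 8.3 degrees, whereas at 600Hz the gap shrinks to about 1.7ms and 0.8 degrees. That tenfold reduction is why the slower rate would make a gaze-driven camera appear jumpy.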

‘This is how the detectors get a clear image of the eye and the pupil’s movements, but the wearer is not obstructed by the camera,’ Kohlbecher said.

Information from the eye tracker is then processed and used to steer a camera driven by piezo actuators, which contain a special ceramic composite that changes shape when a voltage is applied. These actuators react extremely quickly and are also lightweight, quiet and energy efficient.

The system achieves an overall latency — or delay between eye movement and camera movement — of just 10ms, creating a true point-of-view perspective. This has various applications in vision and cognition research, as well as more practical scenarios such as teaching surgical students where they should be looking during an operation.

Presenting their latest work at the Hannover Messe in Germany, the researchers unveiled a wireless adaptation of the system whereby an operator can sit at a computer screen wearing the headset, which communicates remotely with the piezo-controlled cameras on a robot, providing real-time video feedback.

‘These are very natural controls, so it’s not as artificial as a keyboard and a mouse — you don’t need to learn it, you just know how it works,’ Kohlbecher said.

In a demonstration, the operator was able to ‘look around’ and explore the trade fair stands at Hannover, all through the robot. This could have applications in exploration or disaster relief, in environments that are too hazardous for humans.

But the ultimate goal of the research is to create more realistic robots that respond to the suite of subtle social cues used by humans.

‘We’re using this to study human-robot interaction because, when I’m talking to you, a lot of information is communicated by gaze. Of course, in the end, we hope to get an autonomous system that can look at you and interpret your gaze.’

The work is a collaborative effort by Cognition for Technical Systems (CoTeSys), which comprises numerous German academic institutions and private investors.
