Given yesterday’s announcement from Farnborough – a future fighter jet that can deploy air-launched ‘swarming’ Unmanned Air Vehicles – will we see a combination of capabilities that marries human and machine learning elements to create air lethality? DARPA, for example, appear keen on developing a similar idea with Gremlins, a programme that has challenged companies to investigate air-launched and air-recoverable UAS.

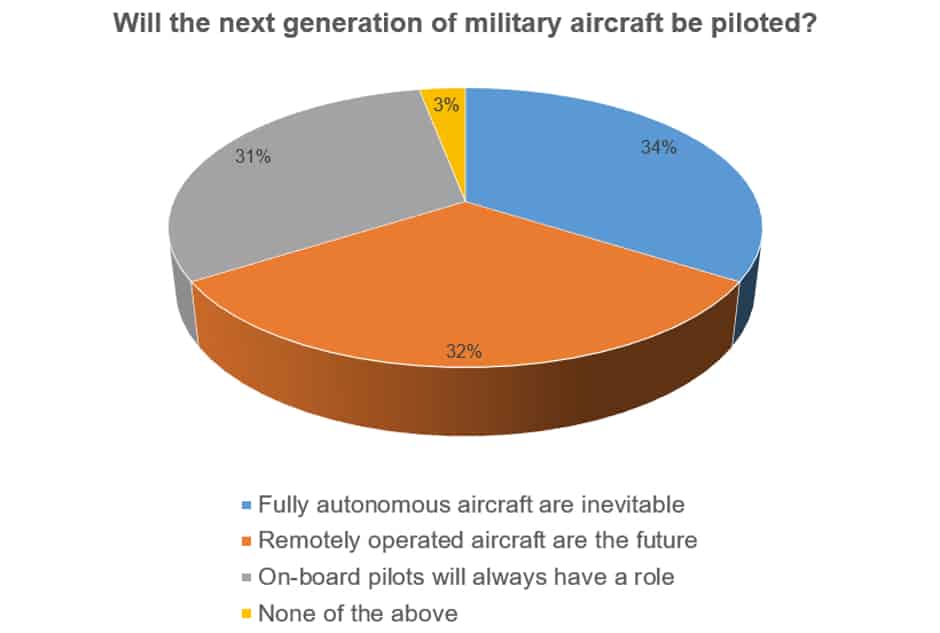

Back in the present, there wasn’t much separating the 477 poll participants, with 34 per cent agreeing that fully autonomous aircraft are inevitable, followed by 32 per cent who think future military aircraft will be remotely operated. Third place went to the 31 per cent who think pilots will remain in the cockpit of next-generation military aircraft, and the remaining three per cent chose ‘none of the above’.

In the comments that followed, doubts were raised about whether autonomous systems could make the right decisions.

Former RAF serviceman David Anderson said: “Machines can only make decisions based on whatever ‘logic’ is programmed into them. The human pilot can apply judgement of all factors, including ‘gut instinct’ something that can never be programmed.”

This view was mirrored by Peter Thornhill who added: “All very plausible but AI cannot replace human intuition. Rather, I could see pilotless squadrons but overseen by a group of manned aircraft within the group to apply their interpretation when required. Whatever the outcome without the risk to the human life of the attacker I can see confrontations becoming more ruthless & merciless.”

Finally, but by no means least, John Patrick Ettridge said: “The problem with having fully Autonomous weapons is that they rely on GPS and Wi-Fi to receive their instructions, so knock out the electronic signs and they do not work. There will always be a need for human control, even if from a “Mother Ship or command post” close to the action, with the Drones being the delivery of expendable ammunition.”

What do you think? Let us know using the comments below.
