The massive data centres that power today's internet rely on banks of servers with microchips similar to those found in consumer electronics. But by building a chip specifically to address the issues that large servers face, researchers at Princeton University believe they can significantly increase performance while also reducing energy consumption.

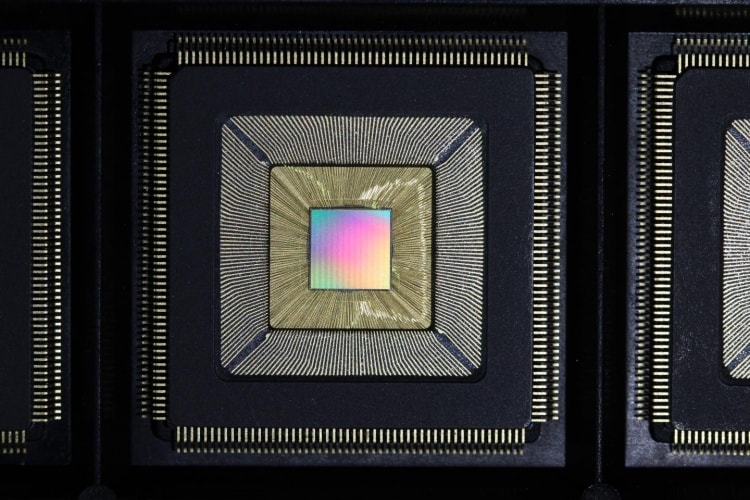

Named Piton, after the metal spikes used to aid mountain climbing, the chip has a scalable architecture, allowing thousands of individual units to be stitched together into a single system containing millions of cores.

"With Piton, we really sat down and rethought computer architecture in order to build a chip specifically for data centres and the cloud," said David Wentzlaff, a Princeton assistant professor of electrical engineering and associated faculty member in the Department of Computer Science. "The chip we've made is among the largest chips ever built in academia and it shows how servers could run far more efficiently and cheaply."

Although the chip was designed at Princeton, it was manufactured by IBM, with funding for the project coming from DARPA, the National Science Foundation, and the Air Force Office of Scientific Research. The current version of Piton measures six by six millimetres and contains more than 460 million transistors, each as small as 32 nanometres.

The vast majority of those transistors are housed in 25 cores, compared with the four or eight cores found in most consumer chips. According to Wentzlaff, Piton's scalable architecture could enable thousands of cores on a single chip, and as many as half a billion cores across a data centre.

"What we have with Piton is really a prototype for future commercial server systems that could take advantage of a tremendous number of cores to speed up processing," he said.

Multi-core computing generally allows for faster processing, provided the software being run can exploit the architecture. Piton's execution drafting technique, which lines up similar processes one after another like cyclists drafting behind one another, can improve energy efficiency by about 20 per cent compared with a standard core. Its memory traffic shaper, which manages the flow of data to and from memory more efficiently, can deliver an 18 per cent jump in performance.

Despite these gains, the Princeton team is releasing its design as open source, forgoing potential commercial returns so that the technology can spread through computing systems as quickly as possible.

"We're very pleased with all that we've achieved with Piton in an academic setting, where there are far fewer resources than at large, commercial chipmakers," said Wentzlaff. "We're also happy to give out our design to the world as open source, which has long been commonplace for software, but is almost never done for hardware."
