This could be one of the results of a European research programme aimed at giving robots a clearer understanding of their environment with 3D camera vision.

The TACO (Three-dimensional Adaptive Camera with Object Detection and Foveation) project, which began in February, will last for 36 months and include European contributions from four research institutes, one university and UK industrial partners Shadow Robot Company and Oxford Technologies.

The technical leader of the project, Jens Thielemann from Norwegian research institute SINTEF, said their improved vision systems will be based on laser-scanning technology.

‘Laser scanners have a significant advantage over other 3D sensing methods in that they perform only one depth measurement at a time,’ he added. ‘This significantly enhances the signal-to-noise ratio, providing more accurate information.’

Thielemann said the problem is that current laser scanners distribute measurement points equidistantly over the scene to be captured.

‘This means an object that is uninteresting for the robot will be sampled as densely as the object the robot is interacting with,’ he added.

‘For instance, in the case of a robot navigating a hallway and trying to avoid obstacles, a normal laser scanner will spend the same amount of time sampling objects that are far away and pose no danger to the robot as it will spend on nearby objects that pose a more imminent danger.’
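To make the contrast concrete, here is a small sketch that splits a fixed budget of laser measurements between three obstacles in the hallway example. The 1/distance weighting is a hypothetical heuristic chosen only for illustration, not a method attributed to TACO, and the function names are invented.

```python
def uniform_allocation(distances_m, budget):
    """Conventional behaviour: every object gets the same share of samples."""
    n = len(distances_m)
    base, extra = divmod(budget, n)
    return [base + (1 if i < extra else 0) for i in range(n)]


def distance_weighted_allocation(distances_m, budget):
    """Hypothetical heuristic: weight each object by 1/distance so that
    nearby (more imminent) obstacles receive more samples."""
    weights = [1.0 / d for d in distances_m]
    total = sum(weights)
    shares = [budget * w / total for w in weights]
    counts = [int(s) for s in shares]
    # Give any leftover samples to the objects with the largest fractional shares.
    leftover = budget - sum(counts)
    by_remainder = sorted(range(len(shares)),
                          key=lambda i: shares[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


# Obstacles 1 m, 4 m and 20 m away, 1000 measurements per sweep.
distances = [1.0, 4.0, 20.0]
print(uniform_allocation(distances, 1000))            # [334, 333, 333]
print(distance_weighted_allocation(distances, 1000))  # [769, 192, 39]
```

With the uniform split, the obstacle 20 m away gets as many measurements as the one 1 m away; the weighted split shifts most of the budget to the nearest obstacle while still spending the same total.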

Thielemann said the TACO researchers will correct this by adapting the sensor’s spatial and temporal resolution so that it mimics the processes carried out by the human eye.

He explained: ‘The central part of the eye has high spatial resolution, whereas the peripheral part has low spatial resolution. Peripheral vision is used to detect objects of interest by their colour, motion, edges and structure, and to move the fovea towards the object of interest.

‘The TACO sensor will mimic this process by obtaining a coarse image of the scene, performing rapid image analysis to detect objects of interest and subsequently obtaining images with higher temporal and spatial resolution of the detected objects. These new images will again be used to detect new objects of interest.’
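The coarse-to-fine loop Thielemann describes can be sketched in a few lines. Everything here is an assumption for illustration: the synthetic scene (a wall 5 m away with a box 1.5 m away), the rule that ‘interesting’ simply means closer than 3 m, and the grid-subdivision scheme. The project’s real detection step would use richer cues such as colour, motion and edges.

```python
def scene_depth(x, y):
    """Hypothetical scene in normalised coordinates [0, 1) x [0, 1):
    a wall 5 m away with a box 1.5 m away in one small patch."""
    if 0.6 <= x < 0.8 and 0.2 <= y < 0.4:
        return 1.5
    return 5.0


def sample_cells(cells, cell_size, depth_fn):
    """Take one depth measurement at the centre of each grid cell (i, j)."""
    return {(i, j): depth_fn((i + 0.5) * cell_size, (j + 0.5) * cell_size)
            for (i, j) in cells}


def foveated_scan(depth_fn, coarse_n=8, refine=4, levels=2, near_m=3.0):
    """Scan coarsely, flag near cells (plus a one-cell border) as objects of
    interest, then re-scan only those cells at `refine` times the resolution."""
    n = coarse_n
    cell_size = 1.0 / n
    cells = {(i, j) for i in range(n) for j in range(n)}
    history = []
    for level in range(levels + 1):
        depths = sample_cells(cells, cell_size, depth_fn)
        history.append(depths)
        if level == levels:
            break
        # Detection step: a deliberately simple stand-in for real image analysis.
        interesting = set()
        for (i, j), d in depths.items():
            if d < near_m:
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        if 0 <= i + di < n and 0 <= j + dj < n:
                            interesting.add((i + di, j + dj))
        # Foveation step: split each interesting cell into refine x refine children.
        cells = {(i * refine + a, j * refine + b)
                 for (i, j) in interesting
                 for a in range(refine) for b in range(refine)}
        n *= refine
        cell_size /= refine
    return history


history = foveated_scan(scene_depth)
print([len(level) for level in history])  # [64, 144, 1296]
```

In this toy case the three passes use 64 + 144 + 1296 = 1504 measurements, whereas sampling the whole scene uniformly at the finest resolution (128 x 128 cells) would take 16,384.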

Thielemann said the robot’s vision system will include optics for emitting and detecting laser light measurement points and a micro-mirror for scanning the laser beam over a complete scene. All of this will be analysed by software designed for rapid object detection and environmental understanding.
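Each laser return is a beam direction plus a measured range, and turning that into a 3D point is basic trigonometry. The sketch below assumes the micro-mirror’s deflection has already been converted into beam azimuth and elevation angles, uses a hypothetical sensor frame (x right, y up, z forward), and generates the kind of uniform raster a conventional scanner would follow; the field-of-view values and function names are illustrative.

```python
import math


def raster_angles(h_fov_deg, v_fov_deg, cols, rows):
    """Uniform raster of beam directions (azimuth, elevation) in radians,
    evenly spaced across the field of view, row by row."""
    angles = []
    for r in range(rows):
        el = math.radians(-v_fov_deg / 2 + v_fov_deg * (r + 0.5) / rows)
        for c in range(cols):
            az = math.radians(-h_fov_deg / 2 + h_fov_deg * (c + 0.5) / cols)
            angles.append((az, el))
    return angles


def beam_to_point(azimuth_rad, elevation_rad, range_m):
    """Convert one measurement (beam direction + range) to sensor-frame
    Cartesian coordinates: x right, y up, z forward (assumed convention)."""
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad),
            horizontal * math.cos(azimuth_rad))


# Simulated flat wall 2 m in front of the sensor: the range along each beam
# is 2 / (cos(el) * cos(az)), so every reconstructed point should have z = 2.
cloud = [beam_to_point(az, el, 2.0 / (math.cos(el) * math.cos(az)))
         for az, el in raster_angles(40, 30, cols=4, rows=3)]
print(len(cloud))  # 12
```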

It is believed that these enhancements to laser-scanning technology will give robots a more human-like understanding of their surroundings, and will also provide accurate information about the distance to every point in the image, something human vision cannot do.

Thielemann added that this will be accomplished using time-of-flight measurements, which measure the time it takes for an emitted signal to travel through the air, reflect off the target object and be received and measured by the sensor.
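The underlying relation is distance = c × t / 2, where t is the round-trip time and the factor of two accounts for the signal travelling out and back. The sketch below is a minimal illustration; the function names are hypothetical, and the optional refractive_index parameter is an assumption for readers who want to correct for air (about 1.0003 rather than the vacuum value of 1).

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact value in vacuum


def tof_distance_m(round_trip_s, refractive_index=1.0):
    """Distance to the target from a round-trip time of flight.
    The signal travels out and back, hence the division by two."""
    return (SPEED_OF_LIGHT_M_S / refractive_index) * round_trip_s / 2.0


def round_trip_time_s(distance_m, refractive_index=1.0):
    """Inverse: how long a return from distance_m takes to arrive."""
    return 2.0 * distance_m * refractive_index / SPEED_OF_LIGHT_M_S


print(tof_distance_m(10e-9))    # about 1.499 m for a 10 ns round trip
print(round_trip_time_s(0.01))  # about 6.7e-11 s: 1 cm of depth is ~67 ps
```

The second line shows why timing precision matters: resolving a single centimetre of depth means resolving differences of tens of picoseconds.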

‘This will be accomplished by emitting modulated laser light, a mix of multiple sine waves, which are reflected by the target object,’ he said. ‘The reception optics, matched to the emission signal, measure the reflection and perform a phase-shift measurement of the emitted sine waves. This phase shift can then be converted to an estimate of depth.’
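For a single modulation frequency f, a measured phase shift Δφ corresponds to a distance d = c·Δφ / (4πf), but only up to the ambiguity range c / (2f), beyond which the phase wraps around. Combining several frequencies is a common way to resolve that ambiguity. This sketch uses two frequencies and a brute-force search for the wrap counts on which they agree; the 30 MHz and 25 MHz values, the search strategy and all function names are assumptions, not details of the TACO design.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def phase_to_distance(phase_rad, freq_hz):
    """Distance implied by a phase shift, valid only modulo the ambiguity range."""
    return C * phase_rad / (4 * math.pi * freq_hz)


def ambiguity_range(freq_hz):
    """Distance at which the phase of a given modulation frequency wraps past 2*pi."""
    return C / (2 * freq_hz)


def simulate_phase(distance_m, freq_hz):
    """Idealised, noise-free phase shift for a target at distance_m."""
    return (4 * math.pi * freq_hz * distance_m / C) % (2 * math.pi)


def unwrap_two_freq(phase1, f1, phase2, f2, max_range_m):
    """Try every wrap count up to max_range_m for both frequencies and return
    the average of the pair of candidate distances that agree most closely."""
    r1, r2 = ambiguity_range(f1), ambiguity_range(f2)
    d1, d2 = phase_to_distance(phase1, f1), phase_to_distance(phase2, f2)
    best_err, best_d = float("inf"), None
    for k1 in range(int(max_range_m // r1) + 1):
        c1 = d1 + k1 * r1
        for k2 in range(int(max_range_m // r2) + 1):
            c2 = d2 + k2 * r2
            if abs(c1 - c2) < best_err:
                best_err, best_d = abs(c1 - c2), (c1 + c2) / 2
    return best_d


f1, f2 = 30e6, 25e6  # ambiguity ranges of roughly 5.0 m and 6.0 m
true_d = 12.0
p1, p2 = simulate_phase(true_d, f1), simulate_phase(true_d, f2)
print(phase_to_distance(p1, f1))  # about 2.0 m: one frequency alone is fooled
print(unwrap_two_freq(p1, f1, p2, f2, max_range_m=25.0))  # about 12.0 m
```

With these two frequencies the combined pattern repeats every c / (2 × 5 MHz), about 30 m, so max_range_m should stay below that. Real measurements are noisy, so a practical system would need a tolerance on the agreement test rather than relying on an exact match.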

According to Thielemann, initial demonstrations will be related to grasping and navigation.

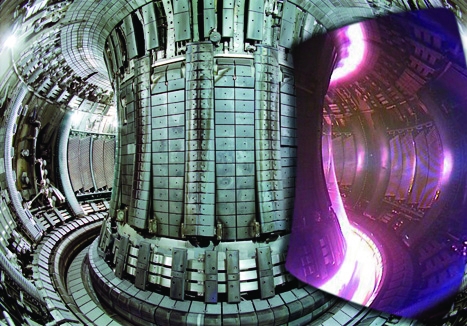

Oxford Technologies will investigate using the sensor for remote maintenance of industrial installations where humans cannot operate, such as the JET fusion reactor.

Shadow Robot will be experimenting with the use of the 3D vision sensor with the company’s patented robotic hand.

Ugo Cupcic, software engineer for Shadow Robot, said the plan is to put their robots through a series of household tasks.

‘The general set of tasks are real-life situations dealing with navigation and manipulation,’ he added. ‘The tasks range from folding a set of towels to delivering a full cup of tea without spilling a drop.

‘We’re also looking into industrial applications where, for example, robots would benefit from being more autonomous and able to navigate their environment while performing tasks.’
