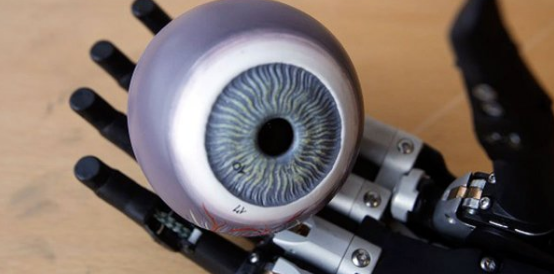

AI allows bionic hand to ‘see’ and grip

Biomedical engineers from Newcastle University have developed a computer vision system for prosthetic hands, allowing users to grasp and interact with common objects.

Current upper-limb prosthetics that can grip are controlled by myoelectric signals from the muscles in the stump, but learning to operate them takes patience and time. The Newcastle team, funded by the EPSRC, created a computer vision system that enables prosthetics to ‘see’ with the assistance of an off-the-shelf camera. The work appears in the Journal of Neural Engineering.

“Responsiveness has been one of the main barriers to artificial limbs,” said Dr Kianoush Nazarpour, senior lecturer in Biomedical Engineering at Newcastle University. “For many amputees the reference point is their healthy arm or leg, so prosthetics seem slow and cumbersome in comparison.”

“Using computer vision, we have developed a bionic hand which can respond automatically - in fact, just like a real hand, the user can reach out and pick up a cup or a biscuit with nothing more than a quick glance in the right direction.”
