MobilePoser puts motion capture into mobile devices

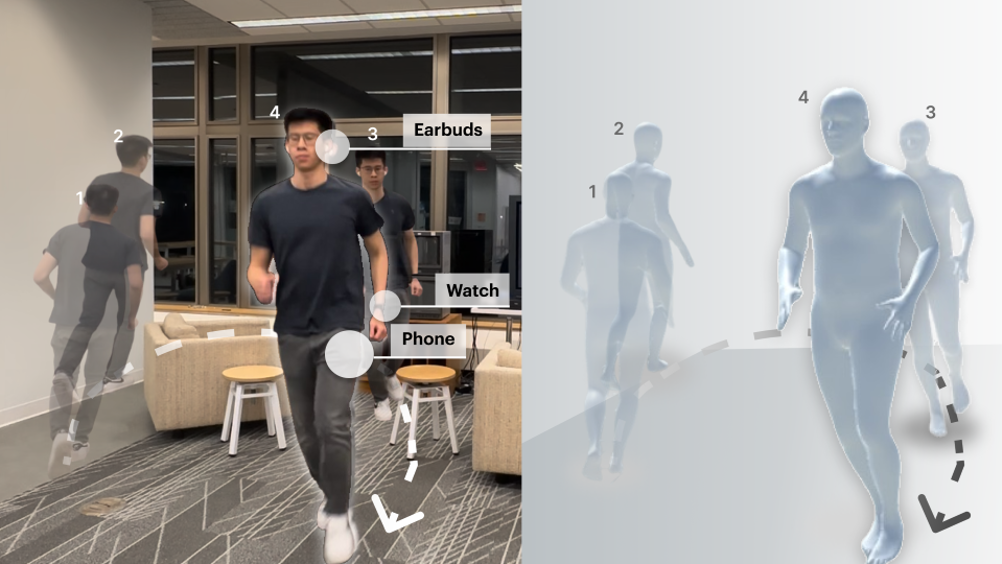

Northwestern University engineers have developed MobilePoser, a new system for full-body motion capture on a mobile device that removes the need for specialised rooms and expensive equipment.

MobilePoser is said to leverage sensors already embedded within consumer mobile devices, including smartphones, smart watches and wireless earbuds. Using a combination of sensor data, machine learning and physics, MobilePoser accurately tracks a person’s full-body pose and global translation in space in real time.

In a statement, study lead Karan Ahuja said: “Running in real time on mobile devices, MobilePoser achieves state-of-the-art accuracy through advanced machine learning and physics-based optimisation, unlocking new possibilities in gaming, fitness and indoor navigation without needing specialised equipment.

“This technology marks a significant leap toward mobile motion capture, making immersive experiences more accessible and opening doors for innovative applications across various industries.”

Ahuja’s team unveiled MobilePoser on October 15, 2024, at the 2024 ACM Symposium on User Interface Software and Technology in Pittsburgh, USA.

In the filmmaking world, motion capture requires actors to wear form-fitting suits covered in sensors as they move around specialised sets. A computer captures the sensor data and then displays the actors’ movements and expressions.

