Neural signals decoded to control robotic arm

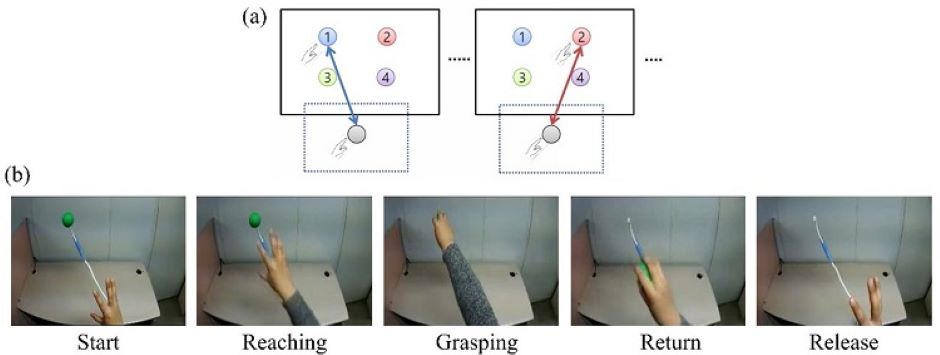

Researchers at the Korea Advanced Institute of Science and Technology have developed a mind-reading system that decodes neural signals from the brain during arm movement.

Described in Applied Soft Computing, the method can be used by a person to control a robotic arm through a brain-machine interface (BMI), which translates nerve signals into commands to control a machine.

BMIs rely on two main techniques to monitor neural signals: electroencephalography (EEG) and electrocorticography (ECoG).

EEG records signals from electrodes placed on the surface of the scalp. It is non-invasive, relatively cheap, safe and easy to use. However, EEG has low spatial resolution and picks up irrelevant neural signals, which makes it difficult to interpret an individual's intentions from the recording.
