Developed by UK software firm rFpro, the ray tracing rendering technology is claimed to be the first to accurately simulate how a vehicle’s sensor system perceives the world. Automotive AI and autonomous systems still rely heavily on real-world testing for much of their training. Simulation that represents reality more accurately is seen as an important tool for speeding up the development of autonomous vehicles.

“The industry has widely accepted that simulation is the only way to safely and thoroughly subject AVs and autonomy systems to a substantial number of edge cases to train AI and prove they are safe,” said Matt Daley, operations director at rFpro.

“However, up until now, the fidelity of simulation hasn’t been high enough to replace real-world data. Our ray tracing technology is a physically modelled simulation solution that has been specifically developed for sensor systems to accurately replicate the way they ‘see’ the world.”

According to rFpro, the ray tracing graphics engine is a higher-fidelity image rendering system that sits alongside the company’s existing rasterization-based rendering engine. Rasterization simulates light taking single bounces through a simulated scene. This is quick enough to enable real-time simulation, and it powers rFpro’s driver-in-the-loop (DIL) solution, which is used across the automotive and motorsport industries.

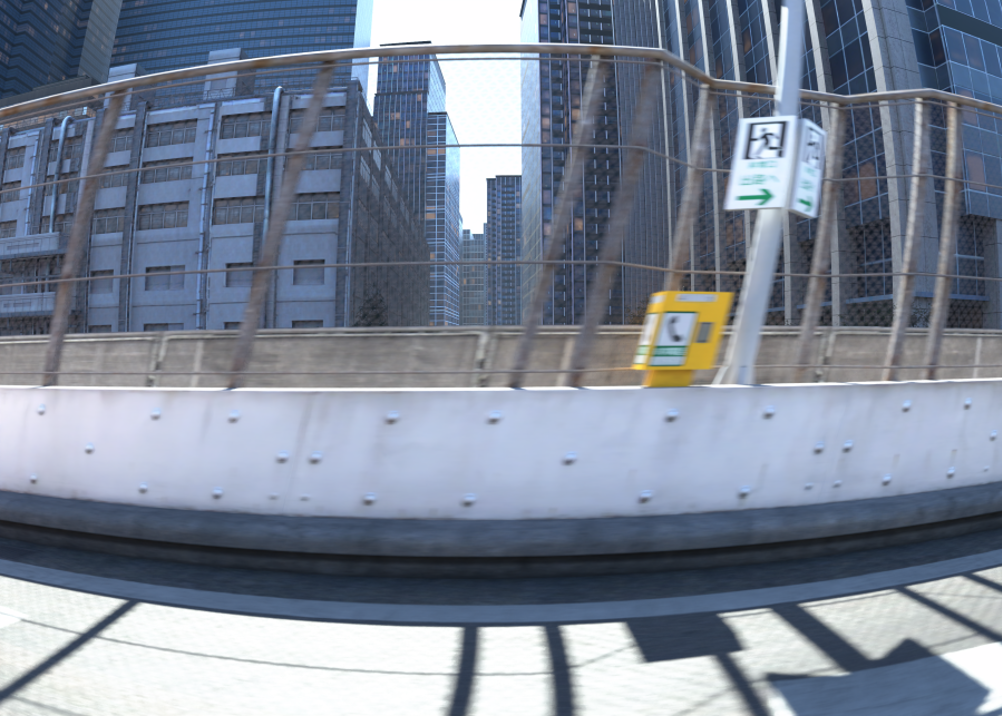

Ray tracing follows multiple light rays through the scene to capture the nuances of the real world, reliably simulating the huge number of reflections that happen around a sensor. rFpro says this is critical for accurately portraying reflections and shadows in low-light scenarios or in environments with multiple light sources, for example multi-storey car parks, illuminated tunnels with bright ambient daylight at their exits, or urban night driving under multiple street lights.
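To give a rough sense of why extra bounces matter in enclosed, dimly lit spaces, the sketch below uses a toy model that is not taken from rFpro: every surface is assumed to reflect the same fraction of the light that reaches it, so each additional bounce adds a smaller share of the direct light. The function name `accumulated_light` and its parameters are hypothetical and chosen for illustration only.

```python
def accumulated_light(direct, reflectance, indirect_bounces):
    """Toy estimate of light reaching a surface in an enclosed scene.

    Assumption (not from the article): every surface reflects the same
    fraction `reflectance` of incoming light, so the k-th indirect bounce
    contributes direct * reflectance**k.
    """
    total = 0.0
    contribution = direct
    # Bounce 0 is the direct light; each later pass adds one more bounce.
    for _ in range(indirect_bounces + 1):
        total += contribution
        contribution *= reflectance
    return total


if __name__ == "__main__":
    for bounces in (0, 1, 4, 16):
        level = accumulated_light(1.0, 0.5, bounces)
        print(f"{bounces:2d} indirect bounces -> relative light level {level:.4f}")
```

Under these assumptions, a scene with 50% reflective surfaces ends up with close to twice the light that a direct-only estimate predicts, which is the kind of gap that becomes visible in car parks and tunnels.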

Modern HDR (High Dynamic Range) cameras used in the automotive industry capture multiple exposures of varying duration. To simulate this accurately, rFpro has introduced its multi-exposure camera API. This ensures that the simulated images contain accurate blurring, caused by fast vehicle motion or road vibrations, alongside physically modelled rolling shutter effects.
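The general idea behind multi-exposure capture and rolling shutter can be sketched in a few lines. This is a simplified illustration and not rFpro’s API: the helper names (`capture`, `merge_hdr`, `rolling_shutter_shift`), the linear sensor response and the saturation level of 1.0 are all assumptions made for the example.

```python
def capture(radiance, exposure_s, saturation=1.0):
    """Simulate one exposure: signal grows with exposure time, then clips."""
    return [min(r * exposure_s, saturation) for r in radiance]


def merge_hdr(frames, exposures_s, saturation=1.0):
    """Estimate scene radiance per pixel from the unsaturated exposures."""
    merged = []
    for pixel_values in zip(*frames):
        estimates = [
            value / t
            for value, t in zip(pixel_values, exposures_s)
            if value < saturation  # clipped samples carry no usable signal
        ]
        if estimates:
            merged.append(sum(estimates) / len(estimates))
        else:
            # Every exposure clipped: only a lower bound is known.
            merged.append(saturation / min(exposures_s))
    return merged


def rolling_shutter_shift(row, line_time_s, speed_px_per_s):
    """Horizontal skew of a moving object on a given sensor row.

    Rows are read out one after another, so row N is sampled
    N * line_time_s later than row 0.
    """
    return row * line_time_s * speed_px_per_s


if __name__ == "__main__":
    scene = [0.5, 20.0, 400.0]          # dark, mid and bright pixels
    exposures = [0.001, 0.01, 0.1]      # seconds, hypothetical values
    frames = [capture(scene, t) for t in exposures]
    print(merge_hdr(frames, exposures))
    print(rolling_shutter_shift(row=100, line_time_s=1e-5, speed_px_per_s=2000))
```

Here the longest exposure resolves the dark pixel while the shortest one keeps the bright pixel below saturation, so the merged result covers a wider range than any single frame. A physically modelled simulation would additionally integrate motion over each exposure window to produce blur, which this sketch leaves out.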

“Simulating these phenomena is critical to accurately replicating what the camera ‘sees’, otherwise the data used to train ADAS and autonomous systems can be misleading,” said Daley. “This is why traditionally only real-world data has been used to develop sensor systems. Now, for the first time, ray tracing and our multi-exposure camera API is creating engineering-grade, physically modelled images enabling manufacturers to fully develop sensor systems in simulation.”
