Soft robotic gripper displays light touch with a twist

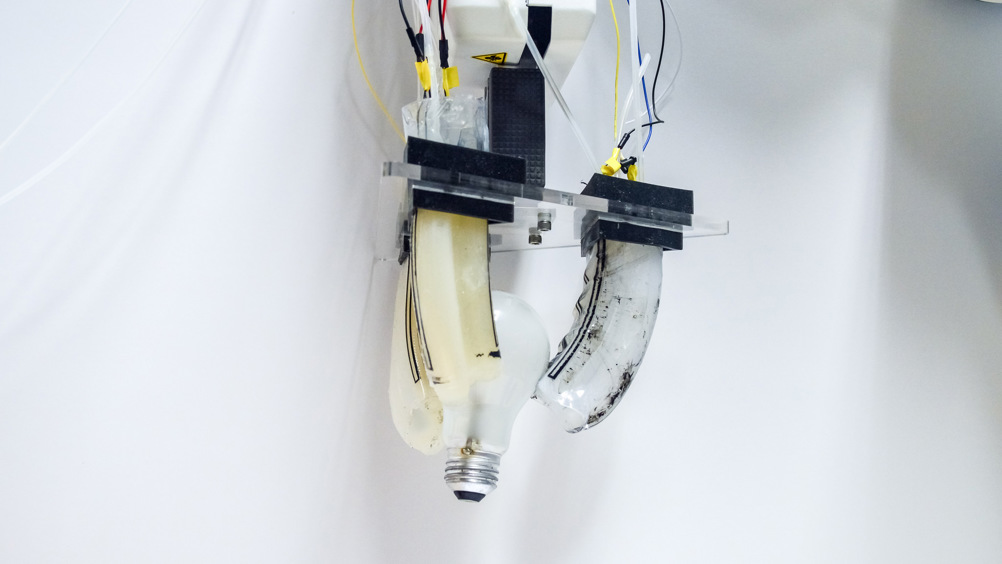

Engineers at the University of California San Diego have developed a soft robotic gripper capable of delicate tasks such as screwing in a light bulb.

The gripper consists of three fingers, each with three pneumatically activated chambers, allowing multiple degrees of movement when air pressure is applied. In addition, each finger is covered with a smart, sensing skin made of silicone rubber embedded with conducting carbon nanotubes. Sheets of the rubber are slipped over the flexible fingers, covering them like skin.

As the fingers flex, the conductivity of the nanotubes changes, allowing the skin to detect and record when the fingers are moving and when they come into contact with an object. The sensor data are sent to a control board, which collates the information to build a 3D model of the object. According to the UC San Diego team, the process is similar to a CT scan, in which 2D image slices are combined into a 3D picture.

(Credit: UC San Diego)
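The sensing principle described above can be illustrated with a short sketch. It is not the UC San Diego team's software: it assumes each finger's skin is read as a single resistance value, that a fixed relative change (the hypothetical `CONTACT_THRESHOLD` of 5%) marks a finger as flexing or touching, and that contact outlines are captured as 2D slices at known heights. The function names `fingers_in_contact` and `stack_slices` are illustrative only.

```python
# Illustrative sketch only: threshold and function names are hypothetical,
# not taken from the UC San Diego gripper.

# Assumed threshold: a relative resistance change above 5% is treated as the
# finger flexing or touching something. Real values would need calibration.
CONTACT_THRESHOLD = 0.05


def relative_change(baseline_ohms: float, reading_ohms: float) -> float:
    """Fractional change in skin resistance relative to the resting baseline."""
    return (reading_ohms - baseline_ohms) / baseline_ohms


def fingers_in_contact(baselines: list[float], readings: list[float],
                       threshold: float = CONTACT_THRESHOLD) -> list[bool]:
    """Flag each finger whose skin resistance has shifted beyond the threshold.

    abs() is used because, depending on the material, strain may raise or
    lower the conductivity of the nanotube network.
    """
    return [abs(relative_change(b, r)) > threshold
            for b, r in zip(baselines, readings)]


def stack_slices(slices: list[list[tuple[float, float]]],
                 heights: list[float]) -> list[tuple[float, float, float]]:
    """Combine 2D contact outlines taken at known heights into one 3D point
    cloud, in the spirit of the CT-scan analogy: each slice contributes its
    (x, y) points at its own z."""
    cloud: list[tuple[float, float, float]] = []
    for z, points in zip(heights, slices):
        cloud.extend((x, y, z) for x, y in points)
    return cloud


if __name__ == "__main__":
    # Three fingers at rest read 100 ohms; two have shifted noticeably.
    print(fingers_in_contact([100.0, 100.0, 100.0], [100.5, 112.0, 94.0]))
    # prints [False, True, True]
```

A real controller would also have to turn finger pose and chamber pressure into the (x, y) positions of each slice; the sketch takes those positions as given.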
