The poll was set in response to a report that suggested autonomous vehicles be introduced to roads, even if they are only marginally safer than humans.

According to a report from the RAND Corporation, thousands of lives could be saved over approximately 15 years if autonomous technology were widely introduced when it is just 10 per cent better than human drivers. Over 30 years, hundreds of thousands of lives could be saved compared with waiting until the technology is at least 75 per cent better.

Report co-author Nidhi Kalra said: "If we wait until these vehicles are nearly perfect, our research suggests the cost will be many thousands of needless vehicle crash deaths caused by human mistakes. It's the very definition of perfect being the enemy of good."

The early introduction of vehicle autonomy would not, however, eliminate road deaths, and society may not accept the technology causing casualties, even if overall harm were reduced.

In light of this, we asked what approach should be taken when integrating autonomy into the UK road network.

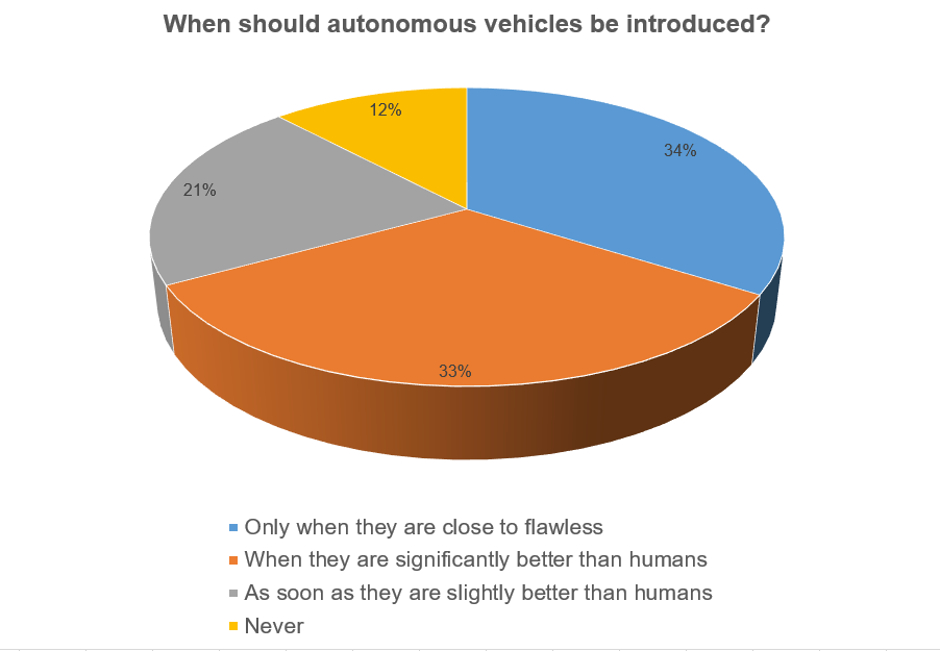

Just over a fifth (21 per cent) of respondents took the RAND Corporation view that autonomous vehicles be introduced when they are slightly better than humans, and 12 per cent said they should never be allowed on the roads.

The poll garnered 473 votes. Of the remaining 67 per cent, 34 per cent took the view that autonomous vehicles should be introduced when they are close to flawless, and 33 per cent agreed that they should be introduced when significantly better than humans.

In the debate that followed, suggestions were made about autonomous vehicles being made available for bad drivers, or for those motorists whose driving is impaired.

Mr Tillier said: ‘Autonomy needs to be used as soon as possible for the drivers who cannot operate the turn signals, who undertake at roundabouts, who exit roundabouts straight from the inside lane, who are not respecting safety distances, who are not respecting limits. The other drivers, who are driving properly or who show care, can remain on current cars.’

This view was echoed by RDutton, who said: ‘Roll-out should be accelerated for drivers that may be less safe than average, such as, the elderly, alcoholics, and drug users.’

Technology and insurance conundrums were also raised, with Steve asking: ‘In the scenario of a child running in front of a fast moving car, where the ‘software’ has to decide to either save the child or save the passenger, who is liable for the consequences of that decision?’

Addressing a number of factors around vehicle autonomy, Edward said: ‘Autonomous vehicles will arrive on our roads at some point but it is a complex issue which has some fairly serious consequences if done wrongly.

‘Firstly, autonomous cars need to be proven to operate safely. Currently there is a lot of testing underway but safe operation has not yet been verified. Indeed, there have been some fairly serious failures in tests so there is clearly some way to go yet.

‘Secondly, once introduced there will be a long period when there is a mix of autonomous and conventional vehicles on the road. A big factor which needs to be understood (it currently isn’t) is how drivers will react when sharing the roads with autonomous vehicles.

‘Thirdly, there will need to be rulings regarding who is responsible when the inevitable accident involving an autonomous vehicle takes place. Is it the owner, the manufacturer or the software company who bears the responsibility for the autonomous vehicle? Car insurance is mandatory for very good reasons and will be required for autonomous vehicles but where the liability sits will be an interesting discussion.’

What do you think? Let us know in Comments below.
