The military robot is on the rise. Indeed, according to the latest figures from the International Federation of Robotics (IFR), defence applications accounted for 40% of the total number of service robots sold last year.

Many of the robots used by the defence sector are relatively benign. A large number of devices are, for instance, used for IED detection or bomb disposal. But, in a technological trend that’s the cause of much ethical hand-wringing, an increasing number of them are being used for offensive purposes.

For military strategists, robot warriors represent an opportunity to put troops out of harm’s way and potentially attack targets with far greater precision, thereby reducing civilian casualties. For the engineers engaged in their development, meanwhile, the defence sector represents a tantalising proving ground for the technology and one of the few routes to market.

But many are deeply concerned about the implications of the rise of the robot. And it’s not just because their fears have been stoked by Hollywood. The increasingly popular US military strategy of using “drones” to carry out targeted killings is, for many, a striking example of why we should be worried about relying too heavily on robots to do our dirty work. Despite denials from Washington, accusations are growing that US drones are responsible for large numbers of civilian casualties, a concern that is backed up by a detailed report published this summer by Stanford and New York Universities.

We’re still a long way away from the so-called Terminator scenario, although the fact that top experts, such as those gathered at this week’s Military Robotics conference in London, now regularly talk in po-faced terms about fleets of robot soldiers is a sign that the gap between science fiction and real life is getting narrower.

And while UAVs represent the most significant deployment of armed robots so far, the pace of technological development in the research community is astonishing.

Leading the charge is DARPA, the US defence department’s advanced research agency, which is driving the development of a range of advanced military robotic systems. Earlier this autumn, DARPA launched its robotic challenge, a competition set up to fast-track and stimulate the development of defence robots able to perform human tasks. At the heart of the competition is a company we’ve featured before in The Engineer, Boston Dynamics, whose Big Dog robot can be seen in action here.

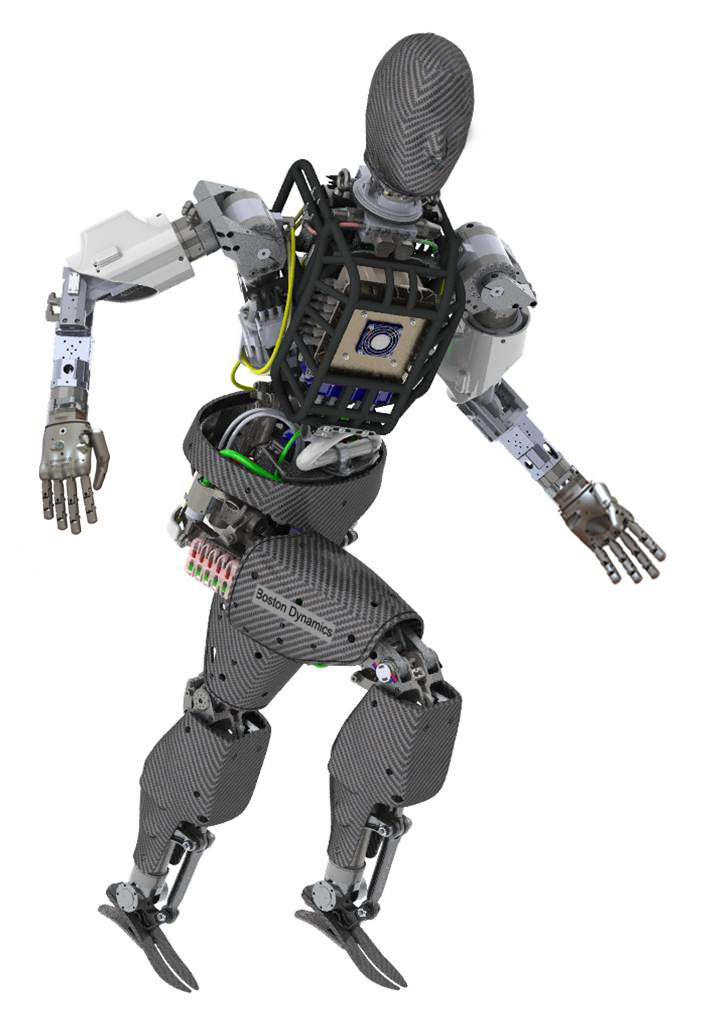

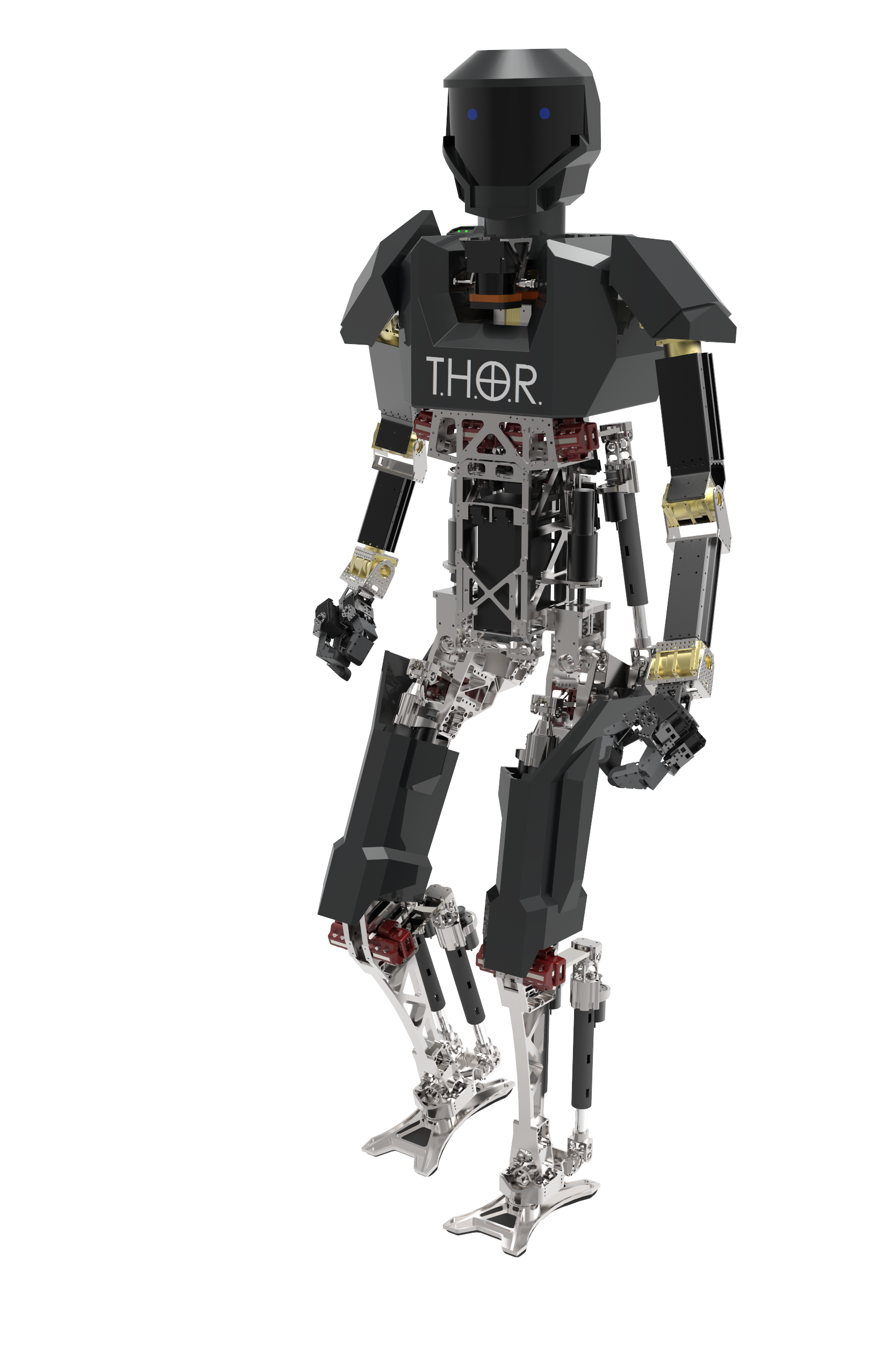

As part of the current competition, the firm is now working on the development of ATLAS, an autonomous humanoid robot that will be used as a platform for other competitors to test software and artificial intelligence systems. You can view a video of the latest iteration of ATLAS here. Other robots being developed through the competition include Raytheon’s Guardian system - a lightweight humanoid robot - and Virginia Tech’s THOR (Tactical Hazardous Operations Robot).

It’s fair to say the systems being developed through the competition will do little to calm the nerves of those alarmed by the prospect of science fiction becoming fact.

But it’s also worth remembering that many of our greatest technical leaps - from the development of nuclear energy, to the invention of the jet engine - have sprung from the incubator of military necessity. And while few of us would regard the rise of robot soldiers without a shiver of distaste, the script - unlike that of a Hollywood movie - is not yet written. In conquering the technical challenges of operating robots in a warzone, engineers are making fundamental breakthroughs that could one day benefit mankind in a host of more benign applications.

Are you worried about military robots? Vote in our online poll
