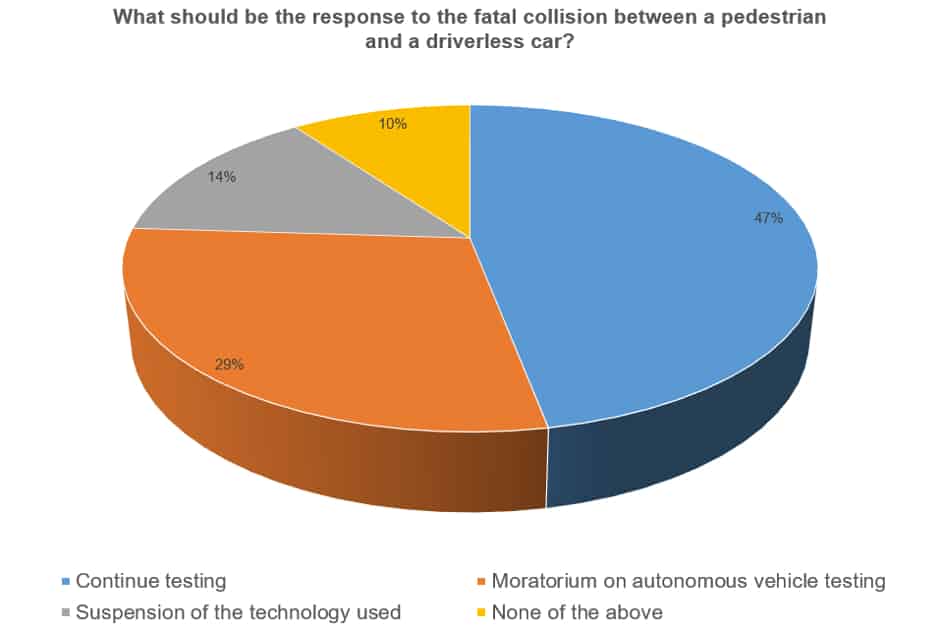

Last week’s poll: responses to Uber crash

The fatal collision that killed a pedestrian in Tempe, Arizona on March 18, 2018 has led some in the US to call for a moratorium on autonomous vehicle testing.

Elaine Herzberg was struck by a driverless vehicle being tested by ride-hailing company Uber. Herzberg was crossing the road away from a designated, illuminated pedestrian crossing at around 10pm when she was hit by a Volvo XC90 SUV that had a human safety observer behind the wheel. The vehicle was travelling at 38mph in a 35mph zone and did not attempt to brake.

The US advocacy group Consumer Watchdog said that “there should be a national moratorium on all robot car testing on public roads until the complete details of this tragedy are made public and are analysed by outside experts.”

Following the accident, Uber suspended autonomous vehicle trials in all North American cities pending an investigation. The company had been testing in Pittsburgh since 2016 and was also carrying out trials in San Francisco, Toronto and the Phoenix area, which includes Tempe. Arizona Governor Doug Ducey has since suspended Uber’s autonomous vehicle testing programme.

