In recent weeks, Google cancelled its contract with the US Department of Defense to develop Project Maven, a machine vision system that would analyse imagery captured by military drones to detect vehicles and other objects, track their motion and provide the resulting data to the military. Employees signed a letter en masse to chief executive Sundar Pichai, stating that the project would outsource the moral responsibility for the application of their work to a third party and that they were not prepared to countenance Google or its contractors building what they called “warfare technology”.

Google’s repositioning is not the first example of pressure being applied to stop AI from being developed for military applications. The Engineer reported in April that a group of academics had threatened to boycott the Korea Advanced Institute of Science and Technology (KAIST) if it developed autonomous weapons that could locate and eliminate targets without human control. Whether or not the institution had planned to carry out such research, its president soon gave an assurance that it would not, and the boycott was called off. Amazon, too, recently abandoned plans to sell facial recognition software to US police after civil rights organisations expressed concerns that communities such as people of colour and immigrants could be targeted by such systems. We have also reported on several occasions on campaigns against “killer robots”, such as those headed by Prof Noel Sharkey.

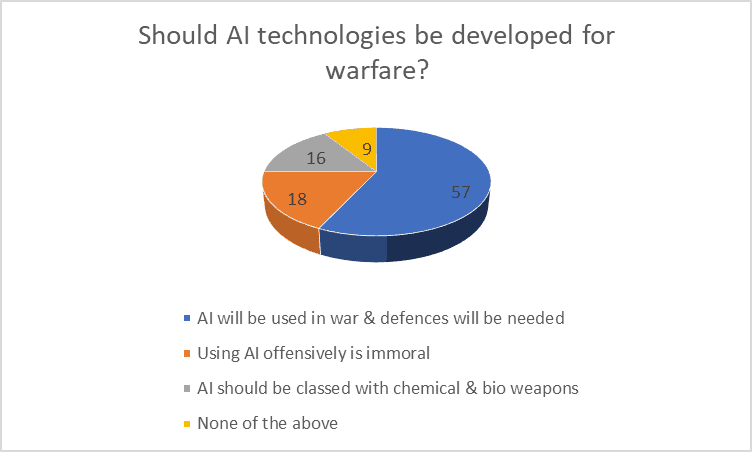

In our poll on the subject last week, 410 readers registered their opinion. Of these, a clear majority – 57 per cent – took the pragmatic view that AI will inevitably be used in war and that defences are needed. A further 18 per cent said that it was always immoral to use AI offensively, and a similar proportion – 16 per cent – said that AI should be classed with chemical and biological weapons. The smallest group of respondents – 9 per cent – declined to pick any of our options.

The comment section was typically lively, with 21 contributions. Many of these took issue with the composition of the poll, complaining that it asked the wrong question or that the options were inadequate. A typical response came from Tony Scales: “What is meant by ‘AI Technologies’? Types of AI tech are already in the game and have been for years. We need a more ‘intelligent’ debate about what constitutes AI tech and how it can, may, has, should be & should not be used. AI is easily conflated with autonomous systems.” Toby Walsh said: “This is a terrible poll. It asks the wrong question. There is a global campaign to ban lethal autonomous weapons without meaningful human control. But no one of the options in the poll lets you support that AI can be used by military but only provided there is meaningful human control.”

Of the more constructive comments, Ian Buglass noted: “AI is/has already been weaponised. That particular horse has bolted, and the stable door has fallen off its hinges. The knowledge, the raw information and the hardware to implement weaponised AI is already freely available. It’s only a matter of time before the less desirable elements present in our midst catch on to the fact that they can further their own ends with tenfold efficiency using published research results.”

Roger Twelvetrees added: “There is already a fair amount of what could be described as AI in sea mines and ‘fire and forget’ missiles, and I don’t expect that developments of those systems will cease, so development of AI for weapons will inevitably continue.”

A reader using the name 1st Watch noted: “Of course we should develop them and aim to be at the fore of their development. If we don’t act proactively possible rogues will certainly develop them AND will probably use them, leaving us at a definite disadvantage should we be attacked.” Chris Elliott pointed out that there is a positive side to robot soldiers: “Robots don’t get angry and commit warcrimes because they saw their mates blown up by an IED yesterday. Robots can be instructed to sacrifice themselves if they are not sure whether the person approaching is hostile; humans have to be allowed to act in self-defence if there is doubt.”

Please continue to send us your opinions on this subject.
