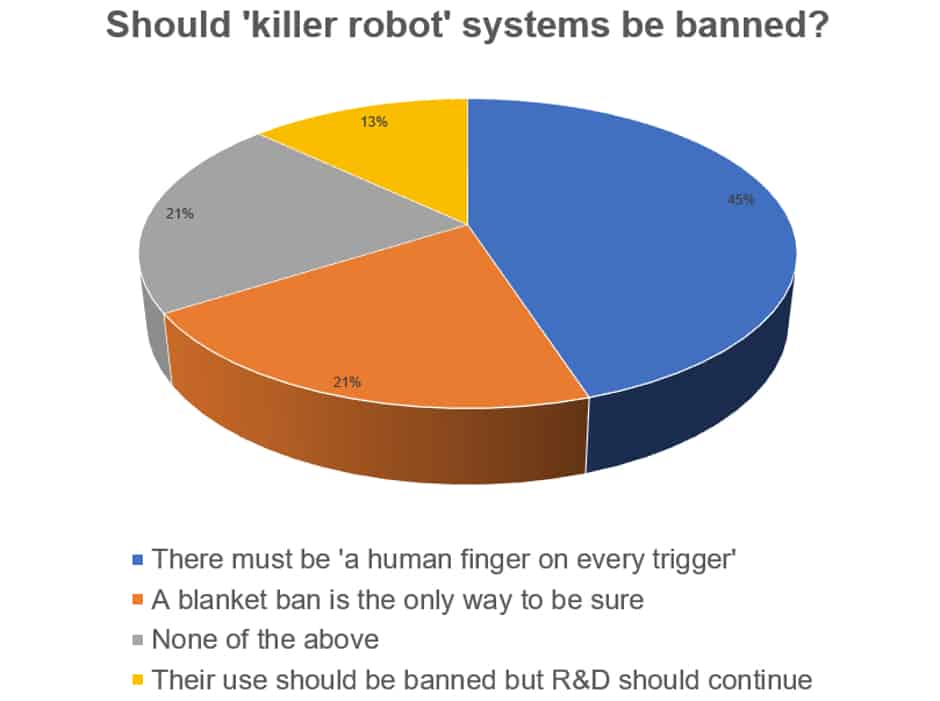

Last week’s poll: “killer robots” and AI in warfare

From the earliest spears to the development of the longbow and beyond, humans have designed their projectile weapons to maximise lethality whilst keeping as far away from the target as possible.

With this logic in mind, it seems inevitable that moves are afoot to create autonomous robotic weapons systems that can go about the terrifying and ugly business of warfare whilst minimising risks to human combatants.

This appeared to be the case in the US, where a proposed upgrade to the Advanced Targeting and Lethality Automated System (or Atlas, which helps gunners on ground combat vehicles to aim) would have seen the system “acquire, identify, and engage targets” faster.

As noted by The Engineer, the US Army then backtracked on this initial announcement to emphasise the role of humans in the system and its adherence to Directive 3000.09, a set of US Department of Defense guidelines ‘designed to minimise the probability and consequences of failures in autonomous and semi-autonomous weapon systems that could lead to unintended engagements’.

The military directive sits alongside International Humanitarian Law and other safeguards intended to protect civilians in armed conflict, which Prof Noel Sharkey discussed in ‘Say no to killer robots’, a post published on The Engineer in 2013.
