Robots make quick grab for objects in complex environments

Roboticists in Australia have developed what they say is a faster and more accurate way for robots to grasp objects, including those found in cluttered and changing environments.

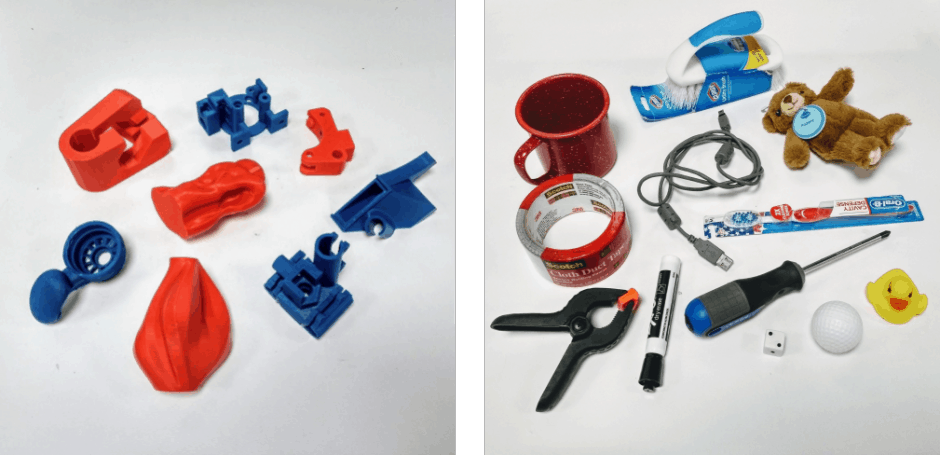

The new approach from Queensland University of Technology allows a robot to quickly scan its environment and, using a depth image, assign a grasp quality to each pixel it captures. In tests, the approach – which is based on a Generative Grasping Convolutional Neural Network – achieved accuracy rates of up to 88 per cent for dynamic grasping and up to 92 per cent in static experiments.
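To illustrate the per-pixel idea, the minimal Python sketch below assumes a network has already produced a 2-D grasp-quality map for one depth frame and simply picks the highest-scoring pixel. The function name `best_grasp_pixel` and the placeholder array are hypothetical and are not taken from the QUT work; the network itself is not shown.

```python
import numpy as np


def best_grasp_pixel(quality_map):
    """Return (row, col, score) for the highest-quality pixel in a 2-D map."""
    quality_map = np.asarray(quality_map, dtype=float)
    if quality_map.ndim != 2:
        raise ValueError("expected a 2-D quality map")
    # argmax works on the flattened array, so convert back to (row, col)
    row, col = np.unravel_index(np.argmax(quality_map), quality_map.shape)
    return int(row), int(col), float(quality_map[row, col])


# Placeholder map standing in for a network's output (assumption, not real data)
quality = np.zeros((4, 5))
quality[2, 3] = 0.9
print(best_grasp_pixel(quality))
```

Because the map can be recomputed for every new depth frame, the same selection step could be rerun as objects move, which is the kind of adaptation the article describes.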

QUT’s Dr Jürgen Leitner said while grasping and picking up an object was a basic task for humans, it had proved incredibly difficult for machines.

“We have been able to program robots, in very controlled environments, to pick up very specific items. However, one of the key shortcomings of current robotic grasping systems is the inability to quickly adapt to change, such as when an object gets moved,” he said. “The world is not predictable – things change and move and get mixed up and, often, that happens without warning – so robots need to be able to adapt and work in very unstructured environments if we want them to be effective.”
