With this logic in mind, it seems inevitable that moves are afoot to create autonomous robotic weapons systems that can go about the terrifying and ugly business of warfare whilst minimising risks to human combatants.

This seemed to be the case over in the US where a proposed upgrade to the Advanced Targeting and Lethality Automated System (or Atlas, which assists gunners on ground combat vehicles with aiming) would’ve seen the system “acquire, identify, and engage targets” faster.

As noted by The Engineer, the US Army then backtracked on this initial announcement to emphasise the role of humans in the system and its adherence to Directive 3000.09, a set of US Department of Defense guidelines ‘designed to minimise the probability and consequences of failures in autonomous and semi-autonomous weapon systems that could lead to unintended engagements’.

The military directive joins International Humanitarian Law and other safeguards brought about to protect civilians in armed conflict, a subject discussed by Prof Noel Sharkey in an Engineer post titled ‘Say no to killer robots’, published in 2013.

Concerns are such that 26 national governments support the Campaign to Stop Killer Robots, which seeks an international treaty banning fully autonomous AI in lethal weapon systems for reasons that include a greater propensity for war if it is waged by machines.

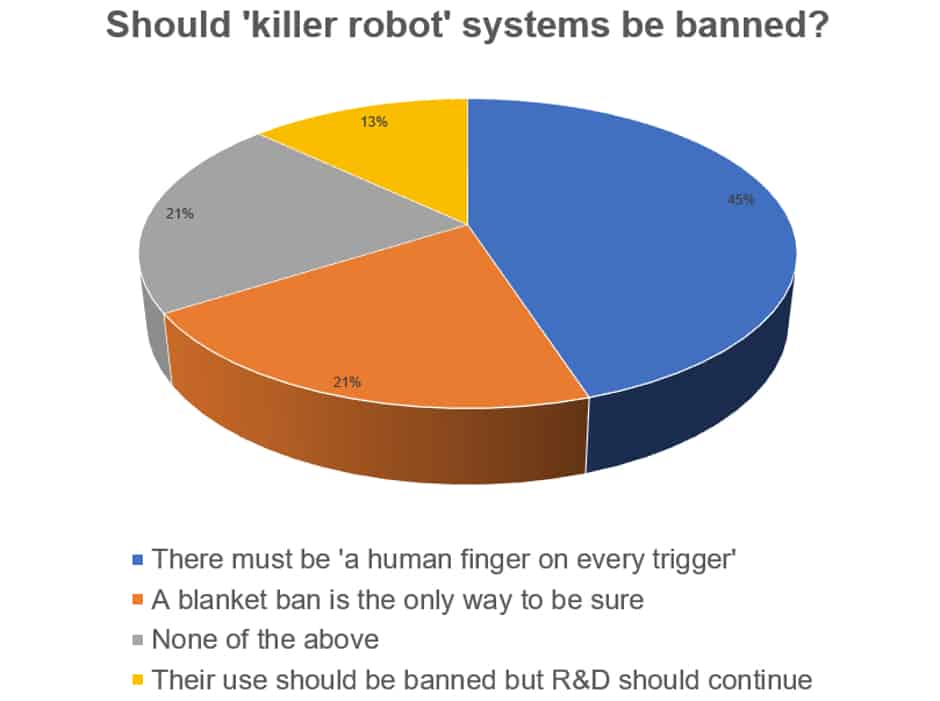

Humans exist in a perpetual state of conflict, but does that excuse the development of ‘killer robots’? Not for 45 per cent of respondents to last week’s poll, who believe there must be ‘a human finger on every trigger’. A total of 13 per cent of respondents agree – like the Chinese government – that the use of such weapons should be banned but R&D should continue. Just over a fifth (21 per cent) said there should be a blanket ban, and the same proportion opted for none of the above.

What do you think? Join the debate in Comments below, but familiarise yourself with our guidelines for the content of comments before submitting.
